Stagewise Reinforcement Learning and the Geometry of the Regret Landscape

By Elliott et al.

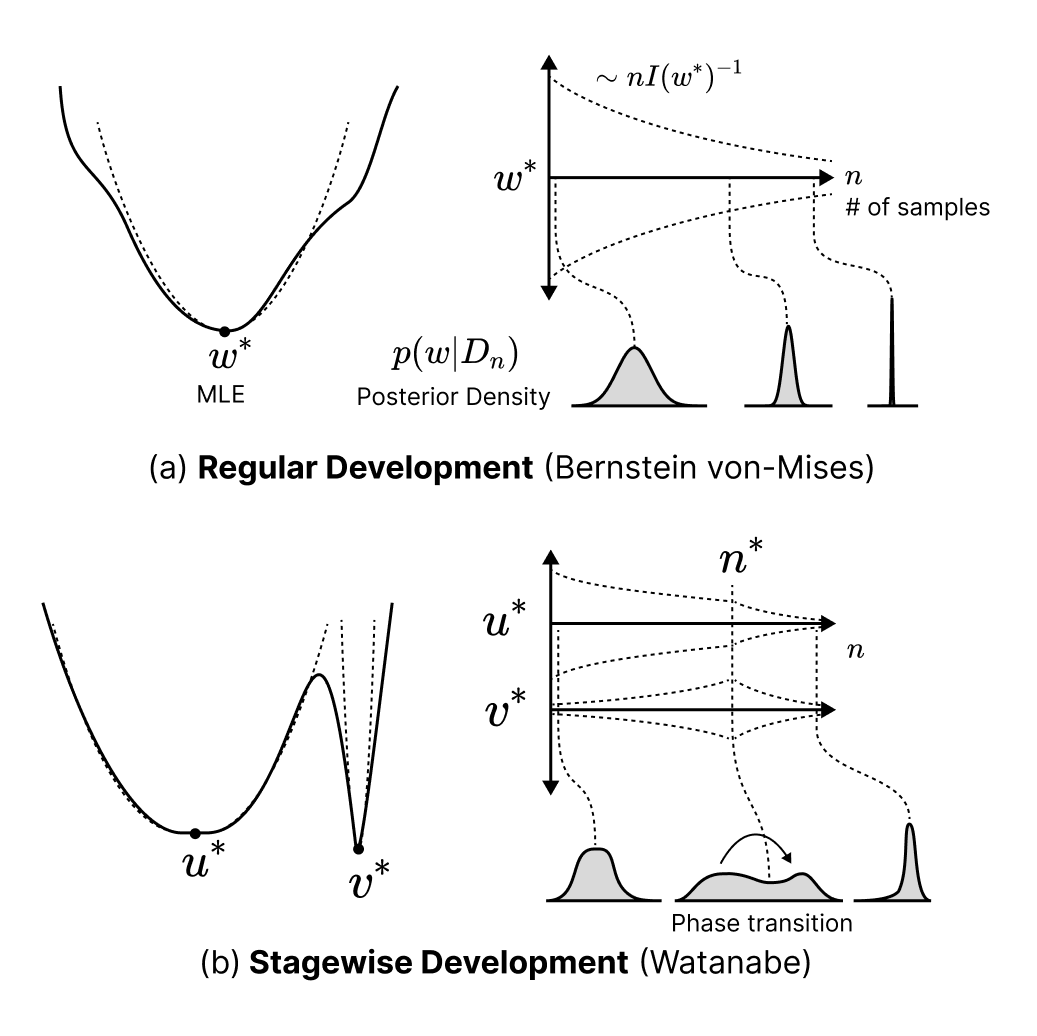

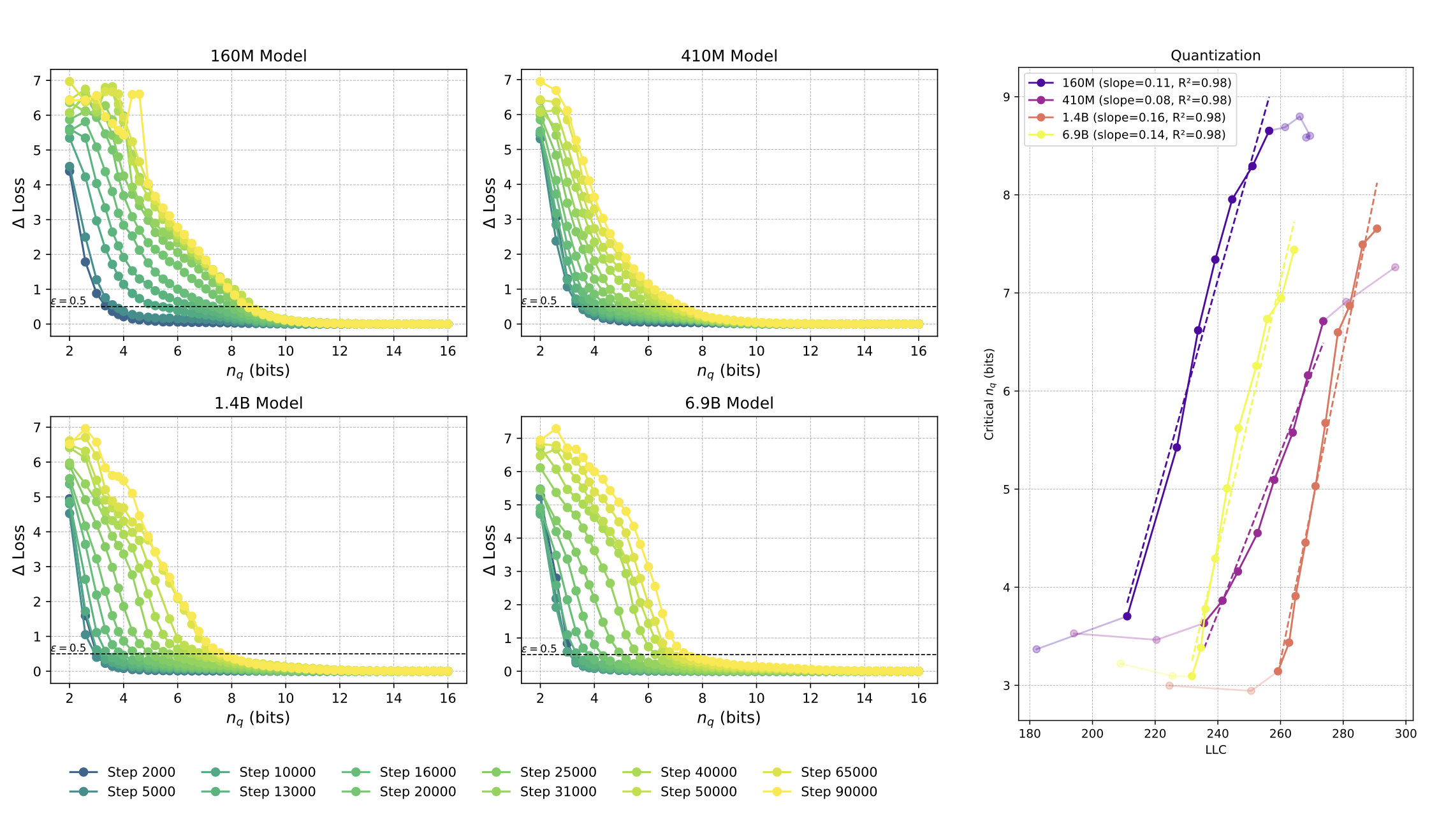

Singular learning theory characterizes Bayesian learning as an evolving tradeoff between accuracy and complexity, with transitions between qualitatively different solutions as sample size increases.
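
To make the tradeoff concrete, the standard asymptotic free-energy expansion from singular learning theory is sketched below. The notation is an assumption introduced here for illustration and is not taken from the paper: $\alpha$ indexes a candidate solution (a region of parameter space), $w^*_\alpha$ is its best parameter, $L_n$ is the empirical loss on $n$ samples, and $\lambda_\alpha$ is its learning coefficient, which measures effective complexity. Lower-order terms are omitted.

```latex
% Free energy of solution \alpha: an accuracy term plus a complexity term
F_n(\alpha) \;\approx\; n\,L_n(w^*_\alpha) \;+\; \lambda_\alpha \log n

% Two solutions: 1 is simpler but less accurate, 2 is more complex but more accurate
% (L_n(w^*_1) > L_n(w^*_2), \ \lambda_1 < \lambda_2).
% The posterior prefers solution 1 exactly when F_n(1) < F_n(2), i.e.
n\,\bigl(L_n(w^*_1) - L_n(w^*_2)\bigr) \;<\; (\lambda_2 - \lambda_1)\,\log n
\quad\Longleftrightarrow\quad
\frac{n}{\log n} \;<\; \frac{\lambda_2 - \lambda_1}{L_n(w^*_1) - L_n(w^*_2)}
```

Under these assumptions, the accuracy term grows linearly in $n$ while the complexity term grows only logarithmically, so the simpler solution is preferred at small sample sizes and the more accurate one takes over once $n / \log n$ exceeds the ratio on the right. This is the kind of transition between qualitatively different solutions that the sentence above describes.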