Dynamics of Transient Structure in In-Context Linear Regression Transformers

Authors

Abstract

Modern deep neural networks display striking examples of rich internal computational structure. Uncovering principles governing the development of such structure is a priority for the science of deep learning. In this paper, we explore the transient ridge phenomenon: when transformers are trained on in-context linear regression tasks with intermediate task diversity, they initially behave like ridge regression before specializing to the tasks in their training distribution. This transition from a general solution to a specialized solution is revealed by joint trajectory principal component analysis. Further, we draw on the theory of Bayesian internal model selection to suggest a general explanation for the phenomena of transient structure in transformers, based on an evolving tradeoff between loss and complexity. We empirically validate this explanation by measuring the model complexity of our transformers as defined by the local learning coefficient.

1 Introduction

Why do neural networks transition between qualitatively different modes of computation during training? This phenomenon has been studied for decades in artificial and biological neural networks (Baldi & Hornik, 1989; Rogers & McClelland, 2004). Recent work on transformers has uncovered particularly salient examples of transitions between two well-characterized alternative ways of approximating the data distribution. For instance, Power et al. (2022) show a “grokking” transition from an initial memorizing solution to a generalizing solution while training transformers to perform modular arithmetic. Conversely, Singh et al. (2024) show a transition from a “transient” generalizing solution to a memorizing solution while training transformers for in-context classification.

In this paper, we study a similar transition from generalization to memorization in transformers trained for in-context linear regression. Following Raventós et al. (2023), we construct sequences with latent regression vectors (tasks) sampled uniformly from a fixed set of size M (the task diversity). In this setting, Raventós et al. (2023) showed that fully trained transformers may behaviorally approximate either of two distinct in-context learning algorithms:

- Discrete minimum mean squared error (dMMSE): The posterior mean given a uniform prior over the tasks (implies memorizing the tasks in some fashion).

- Ridge regression (ridge): The posterior mean given a Gaussian prior from which the fixed tasks were initially sampled (independent of the task diversity, generalizes to new tasks).

Moreover, Panwar et al. (2024, §6.1) showed that for intermediate values, the out-of-distribution loss of a given transformer is non-monotonic, suggesting that these transformers initially approach ridge before diverting towards dMMSE. We term this phenomenon transient ridge.

In this paper, we extend the brief analysis of Panwar et al. (2024, §6.1) and investigate the dynamics of transient ridge in detail, contributing the following.

- In Section 4, we replicate transient ridge and we comparatively analyze the in-distribution function-space trajectories of our transformers using joint trajectory principal component analysis, revealing generalization–memorization as a principal axis of development and clarifying how the task diversity affects the dynamics.

- In Section 5, we explain transient ridge as the transformer navigating a tradeoff between loss and complexity that evolves over training, akin to Bayesian internal model selection (Watanabe, 2009, §7.6; Chen et al., 2023), and we validate this explanation by estimating the complexity of the competing solutions using the local learning coefficient (Lau et al., 2025).

These results expand our understanding of the transient ridge phenomenon and highlight the evolving loss/complexity tradeoff as a promising principle for understanding similar transience phenomena. Section 6 discusses limitations and directions for further investigation.

2 Related work

In this section, we review empirical and theoretical work on the topic of the emergence and transience of computational structure in deep learning.

Internal computational structure.

Modern deep learning has shown striking examples of the emergence of internal computational structure in deep neural networks, such as syntax trees in transformers trained on natural language (Hewitt & Manning, 2019), conceptual chess knowledge in AlphaZero (McGrath et al., 2022), and various results from mechanistic interpretability (e.g., Olah et al., 2020; Cammarata et al., 2020; Elhage et al., 2021).

It is known that properties of the data distribution influence the emergence of computational structure. For example, Chan et al. (2022) studied an in-context classification and identified data properties that are necessary for transformers to develop in-context learning abilities. Raventós et al. (2023) studied in-context linear regression (Garg et al., 2022; Akyürek et al., 2023; von Oswald et al., 2023; Bai et al., 2024) and showed that changing the task diversity of the training distribution can change the in-context learning algorithm approximated by the fully-trained transformer.

Transient structure.

In some cases, multiple interesting computational structures emerge throughout training, with different ones determining model outputs at different times. A well-known example is the “grokking” transition, in which transformers learning modular arithmetic initially memorize the mappings from the training set, before eventually generalizing to unseen examples (Power et al., 2022) using an internal addition algorithm (Nanda et al., 2023).

Conversely, Singh et al. (2024) showed that transformers trained for in-context classification (Chan et al., 2022) can gradually shift from predicting based on contextual examples to predicting memorized labels, losing the ability to generalize to new mappings. Singh et al. (2024) termed this phenomenon “transient in-context learning.”

Similarly, for in-context linear regression, Panwar et al. (2024, §6.1) observed transformers initially achieving low out-of-distribution generalization loss (indicating that they approximate ridge regression) before eventually specializing to a memorized set of tasks. In an attempt to unify terminology, we call this phenomenon “transient ridge.” Compared to Panwar et al. (2024, §6.1), our work is novel in that it offers a more in-depth empirical analysis of this phenomenon, and we also offer an explanation of the phenomenon.

Explaining transient in-context learning.

There have been attempts to explain transience in the in-context classification setting originally studied by Singh et al. (2024). Nguyen & Reddy (2024) offer a simplified model in which in-context learning is acquired more rapidly than in-weight learning, and targeted regularization of the induction mechanism can cause it to later give way to in-weight learning.

Chan et al. (2024) give an explanation based on regret bounds for in-context and in-weight learning. In their model, in-context learning emerges because it is initially more accurate than in-weight learning for rare classes. Once the model sees more data for a class, in-weight learning becomes more accurate than in-context learning, due to limitations in their proposed induction mechanism.

Compared to these models, we offer a higher-level explanation of the general phenomenon of transient structure in terms of principles governing the preference for one solution over another at different points in training. We study this explanation in the setting of in-context linear regression, but it is also applicable in other settings.

Explaining transient structure.

There have been several attempts to explain transient structure in more general terms. If the memorizing solution achieves lower loss than the transient generalizing solution, the ultimate preference for memorization is not surprising (Singh et al., 2024; Park et al., 2024). The question remains, why would a generalizing solution arise in the first place if it is not as accurate as the memorizing solution (Singh et al., 2024)?

Panwar et al. (2024) speculate that the initial emergence of the generalizing solution could be due to an inductive bias towards simplicity. However, this still leaves the question, given that a less-accurate generalizing solution does emerge, why would it then fade later in training (Singh et al., 2024)?

Our work integrates these two perspectives. Rather than prioritizing accuracy or simplicity, we postulate a tradeoff between accuracy and simplicity that evolves over training. This explains the emergence of a simpler, less accurate generalizing solution (ridge) and its subsequent replacement by a complex, more accurate memorizing solution (dMMSE).

Internal model selection in deep learning.

Recent work has studied the relevance of internal model selection in Bayesian inference to deep learning. Chen et al. (2023) showed that, when small autoencoders transition between different encoding schemes during training (Elhage et al., 2022), such transitions are consistent with Bayesian inference. Hoogland et al. (2024) and Wang et al. (2024) found that the same theory can be used to detect the formation of internal structure, such as induction circuits (Elhage et al., 2021; Olsson et al., 2022) in small language models. Ours is the first work to analyze a transition between two transformer solutions in detail from this perspective.

3 In-context linear regression

In this section, we introduce the in-context linear regression setting and the idealized dMMSE and ridge solutions, largely following Raventós et al. (2023).

3.1 Nested multi-task data distributions

Given a latent regression vector, or task, , we define a conditional distribution of sequences of i.i.d. pairs

where and . We set , , and .

We then define an unconditional data distribution of sequences , where is one of several task distributions described below. We sample a dataset of size , , by first sampling and then sampling for .

We define a task distribution for each task diversity as follows. We fix an unbounded i.i.d. sequence . For we define

We further define and . We denote by the data distribution formed from , and by a corresponding dataset.

Note that, in a departure from Raventós et al. (2023), the task sets are nested by construction. In particular, the root task is included at every , allowing us to compare all models by their behavior on .
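The nested construction can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' data pipeline: it assumes tasks and inputs are standard Gaussian and labels are the task-input inner product plus Gaussian noise, and the names `sample_task_sequence` and `sample_batch`, together with all numeric values, are hypothetical.

```python
import numpy as np


def sample_task_sequence(num_tasks, dim, rng):
    """Draw a fixed i.i.d. sequence of tasks (assumed standard Gaussian).

    The task set for diversity M is the first M rows, so task sets for
    different M are nested and all of them contain row 0 (the root task).
    """
    return rng.standard_normal((num_tasks, dim))


def sample_batch(tasks, M, batch_size, seq_len, noise_var, rng):
    """Sample sequences whose latent task is uniform over the first M tasks."""
    dim = tasks.shape[1]
    idx = rng.integers(0, M, size=batch_size)            # uniform task index
    xs = rng.standard_normal((batch_size, seq_len, dim))  # assumed Gaussian inputs
    noise = np.sqrt(noise_var) * rng.standard_normal((batch_size, seq_len))
    ys = np.einsum("bkd,bd->bk", xs, tasks[idx]) + noise  # noisy linear labels
    return xs, ys, idx
```

Because every task set is a prefix of the same sequence, calling `sample_batch` with `M=1` yields sequences from the root task only, which is what makes a shared evaluation set possible across task diversities.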

3.2 Mean squared error objective

Given a sequence , denote by the context subsequence with label . Let be a function mapping contexts to predicted labels. Given a dataset we define the per-token empirical loss

| (1) |

Averaging over context lengths we obtain the empirical loss

| (2) |

The corresponding population loss is defined by taking the expectation over the data distribution ,

| (3) |

For a function implemented by a transformer with parameter , we denote the losses , , and . For task diversity we use a superscript .
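As a concrete reading of the definitions above, the following sketch computes the per-token and averaged empirical losses from an array of predictions. It assumes predictions and labels are stored as arrays of shape (number of sequences, number of context lengths); the function names are illustrative only.

```python
import numpy as np


def per_token_loss(preds, ys):
    """Mean squared error at each context length, averaged over sequences.

    preds, ys: arrays of shape (N, K). Returns an array of shape (K,).
    """
    return np.mean((preds - ys) ** 2, axis=0)


def empirical_loss(preds, ys):
    """Average of the per-token losses over all K context lengths."""
    return float(np.mean(per_token_loss(preds, ys)))
```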

3.3 Idealized in-context linear regression predictors

Given a context there are many possible algorithms that could be chosen to predict . Raventós et al. (2023) studied the following two predictors:

Predictor 1 (dMMSE).

For and , the discrete minimum mean squared error predictor, dMMSEM, is the function such that

| (4) |

where the dMMSEM task estimate is given by

Note that the dMMSEM task estimate and therefore the prediction explicitly depends on the tasks .

Predictor 2 (ridge).

For , the ridge predictor is given by

| (5) |

where if the task estimate is , otherwise the task estimate is given by -regularized least-squares regression on the examples in the context with the regularization parameter set to ,

where and .
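The two task estimates can be sketched as follows. This is a hedged illustration rather than the paper's implementation: it assumes a standard Gaussian task prior and Gaussian label noise with variance `noise_var`, under which the ridge regularization strength equals the noise variance and the dMMSE estimate is a likelihood-weighted average of the fixed tasks. The function names are hypothetical.

```python
import numpy as np


def ridge_estimate(xs, ys, noise_var):
    """Posterior mean under an assumed N(0, I) task prior.

    xs: (k, D) context inputs, ys: (k,) context labels.
    With an empty context the estimate is the prior mean (zero).
    """
    dim = xs.shape[1]
    if len(ys) == 0:
        return np.zeros(dim)
    gram = xs.T @ xs + noise_var * np.eye(dim)  # regularized Gram matrix
    return np.linalg.solve(gram, xs.T @ ys)


def dmmse_estimate(xs, ys, tasks, noise_var):
    """Posterior mean under a uniform prior over the rows of `tasks` (M, D)."""
    resid = ys[None, :] - tasks @ xs.T                   # (M, k) residuals per task
    log_w = -0.5 * np.sum(resid ** 2, axis=1) / noise_var
    log_w -= log_w.max()                                 # stabilize the softmax
    w = np.exp(log_w)
    w /= w.sum()
    return w @ tasks                                     # weighted average of tasks
```

In both cases the prediction for a query input is the inner product of the task estimate with that input. With an empty context `dmmse_estimate` returns the mean of the task set, while `ridge_estimate` returns zero, which is one way to see why the two predictors differ most on early tokens.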

Optimality of predictors.

Raventós et al. (2023) showed that for finite task diversity , given data distribution , the minimum mean squared error predictions are given by equation 4, that is, dMMSEM, whereas for infinite task diversity, given data distribution , the minimum mean squared error predictions are given by equation 5, that is, ridge.

Moreover, note that for a fixed context , we have that as , . It follows that ridge is an approximately optimal predictor for given a large finite task diversity . However, for all finite task diversities it remains possible to reduce expected loss on by specializing to the tasks in (at the cost of increased loss on sequences constructed from other tasks).

Consistency of predictors.

The task estimates are both asymptotically consistent assuming unbounded sequences drawn based on a realizable task . However, the task estimates will differ for all (due to the different priors, and ), particularly for early tokens and especially in the under-determined regime .

4 The transient ridge phenomenon

In this section, we replicate the transient ridge phenomenon observed by Panwar et al. (2024, §6.1) by training transformers at a range of task diversity parameters and evaluating their performance on out-of-distribution (OOD) sequences.

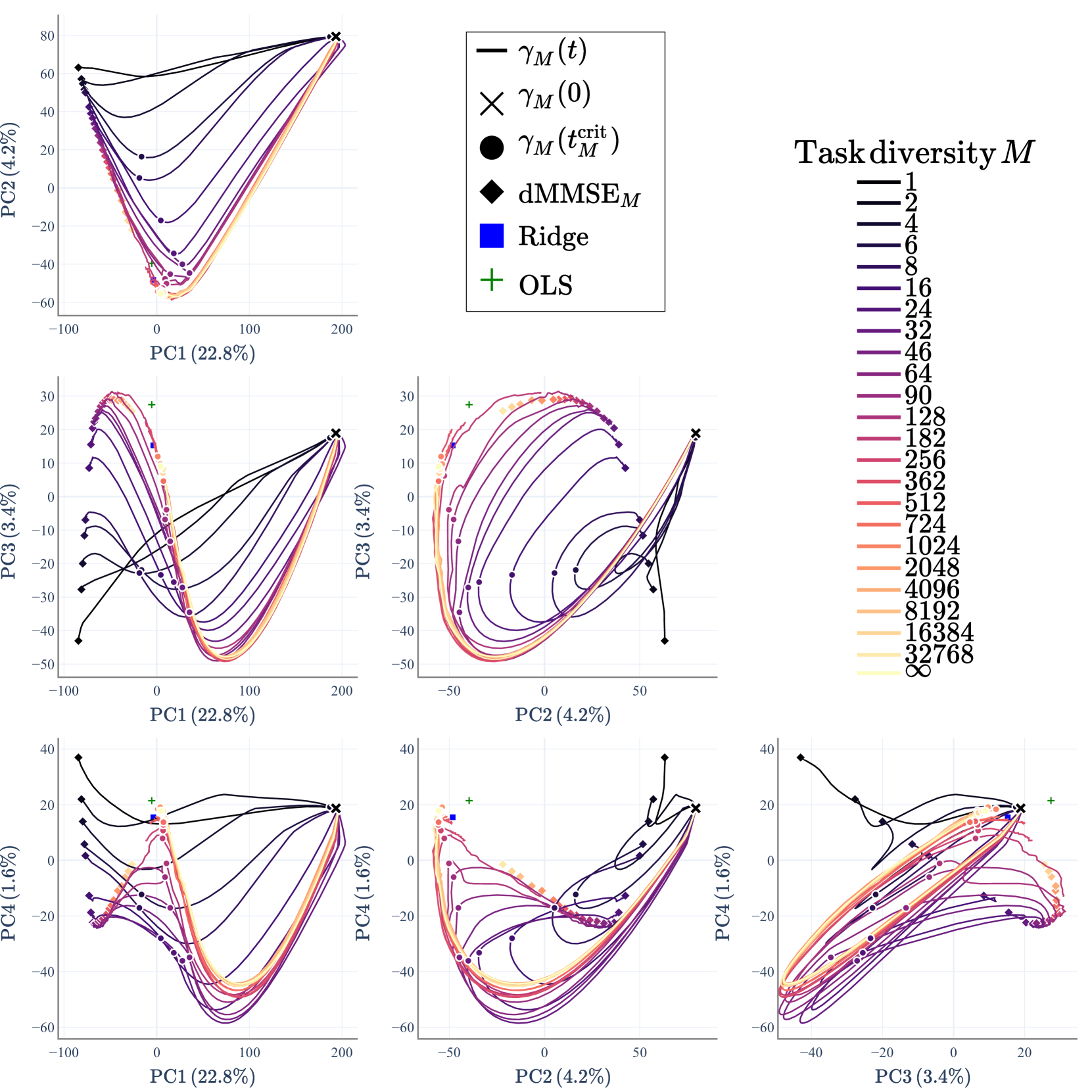

We then apply the general technique of joint trajectory PCA: We use principal component analysis (PCA) to decompose the collective function-space trajectories of the transformers, producing a low-dimensional representation of their behavioral development. Without having to specify the idealized predictors, we recover the difference between dMMSE and ridge as correlated with the second principal component, and show that in the lead-up to the task diversity threshold trajectories are increasingly drawn towards ridge.

4.1 Transformer training

We train transformers on nested multi-task data distributions with varying task diversity (Section 3.1) under the mean squared error objective (Section 3.2) to see when they behaviorally approximate dMMSE or ridge (Section 3.3). We use a -layer transformer with million parameters (details in Appendix A; more architectures in Appendix I).

We train with each of a set of task diversities ranging from to and also including . Each run generates a trajectory through parameter space for training steps , from which we subsample checkpoints using a union of linear and logarithmic intervals (Section A.4). For notational ease, we sometimes denote the function as .

4.2 Joint trajectory principal component analysis

An established method for studying the development of structure and function in a system is to analyze its trajectory in configuration space. Amadei et al. (1993) developed the technique of applying PCA to such trajectories, called essential dynamics or simply trajectory PCA. It is argued that important features of trajectories appear in the essential subspace spanned by the leading principal components (Briggman et al., 2005; Ahrens et al., 2012), though interpreting PCA of time series requires care (cf. Shinn, 2023; Antognini & Sohl-Dickstein, 2018; also Section C.1).

Trajectory PCA has seen diverse applications in molecular biology (Amadei et al., 1993; Meyer et al., 2006; Hayward & De Groot, 2008), neuroscience (Briggman et al., 2005; Cunningham & Yu, 2014), and deep learning (Olsson et al., 2022; Mao et al., 2024). We adapt a multi-trajectory variant (Briggman et al., 2005) to study the collective behavioral dynamics of our family of transformer models trained with different task diversities. In particular, we simultaneously perform PCA on the trajectories of all models through a finite-dimensional subspace of function space. Our detailed methodology for this joint trajectory PCA is as follows.

Joint encoding of transformer trajectories.

Given a parameter , we can view the transformer as mapping each sequence to a vector of predictions for its subsequences,

We fix a finite dataset of input sequences (recalling that the root task is shared by all task sets, so is the natural task to use to compare in-distribution behavior). We concatenate the outputs of for each input into one long row vector,

representing the function as a point in a finite-dimensional subspace of function space.

We apply this construction to each transformer checkpoint . For each , we aggregate the row vectors from each checkpoint into a matrix and then stack each vertically into :

Principal component analysis.

We apply PCA to the joint matrix . Supposing has been mean-centered, it has a singular value decomposition where has left singular vectors as columns, is a diagonal matrix of ordered positive singular values, and has right singular vectors as its columns. For , let denote the loading matrix given by the first columns of . The span of these columns forms the -dimensional (joint) essential subspace.

Projecting into the essential subspace.

The corresponding projection from feature space into the essential subspace is given by where . The developmental trajectory of each model is then represented as a curve defined by

For any principal component dimension we call the th component function a PC-over-time curve.

The dMMSEM and ridge predictors defined in equations 4 and 5 can likewise be encoded as row vectors and projected into the essential subspace. For let

Then each predictor projects to a single point in the essential subspace, . Note that we do not include the points , …, in the data prior to performing PCA.
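The procedure above can be sketched with NumPy's SVD. This is a minimal sketch under stated assumptions: each trajectory is assumed to be stored as a matrix with one row of concatenated predictions per checkpoint, and the name `joint_trajectory_pca` and its return values are illustrative rather than taken from the paper's code.

```python
import numpy as np


def joint_trajectory_pca(trajectories, num_components=2):
    """Fit PCA on stacked trajectories and project each one.

    trajectories: list of arrays, each (num_checkpoints_i, num_features).
    Returns the projected curves, a projection function for new rows
    (for example encoded idealized predictors), and the fraction of
    variance explained by the retained components.
    """
    joint = np.vstack(trajectories)               # stack all models' checkpoints
    mean = joint.mean(axis=0, keepdims=True)
    _, sing, vt = np.linalg.svd(joint - mean, full_matrices=False)
    loadings = vt[:num_components].T              # (num_features, num_components)
    explained = float((sing[:num_components] ** 2).sum() / (sing ** 2).sum())

    def project(rows):
        # Center with the training mean, then map into the essential subspace.
        return (np.atleast_2d(rows) - mean) @ loadings

    curves = [project(t) for t in trajectories]
    return curves, project, explained
```

Points that were not part of the fit, such as the encoded dMMSE and ridge predictors, can be passed to the returned `project` function afterwards, so they are located in the essential subspace without influencing the principal components.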

4.3 Experimental results

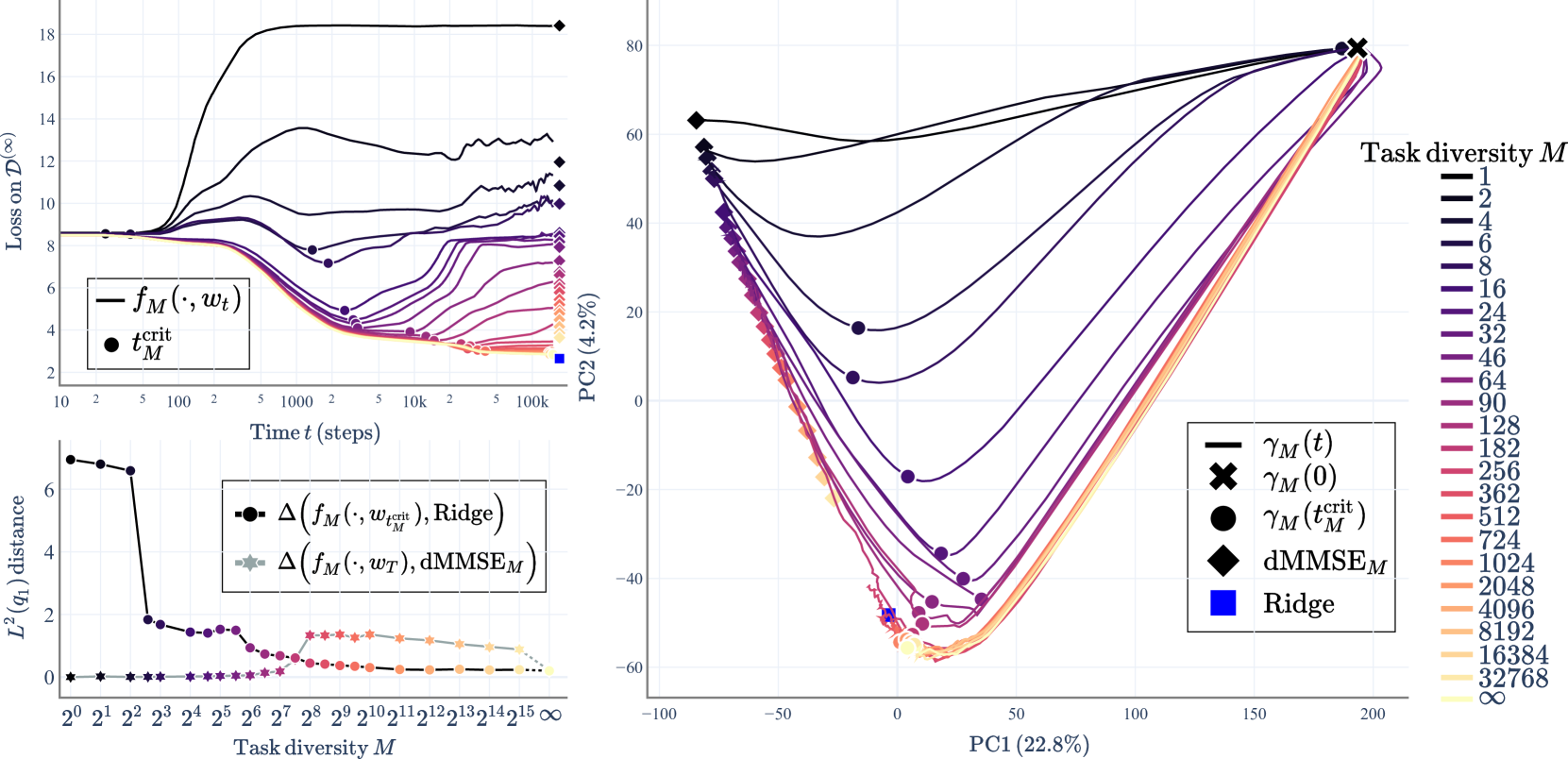

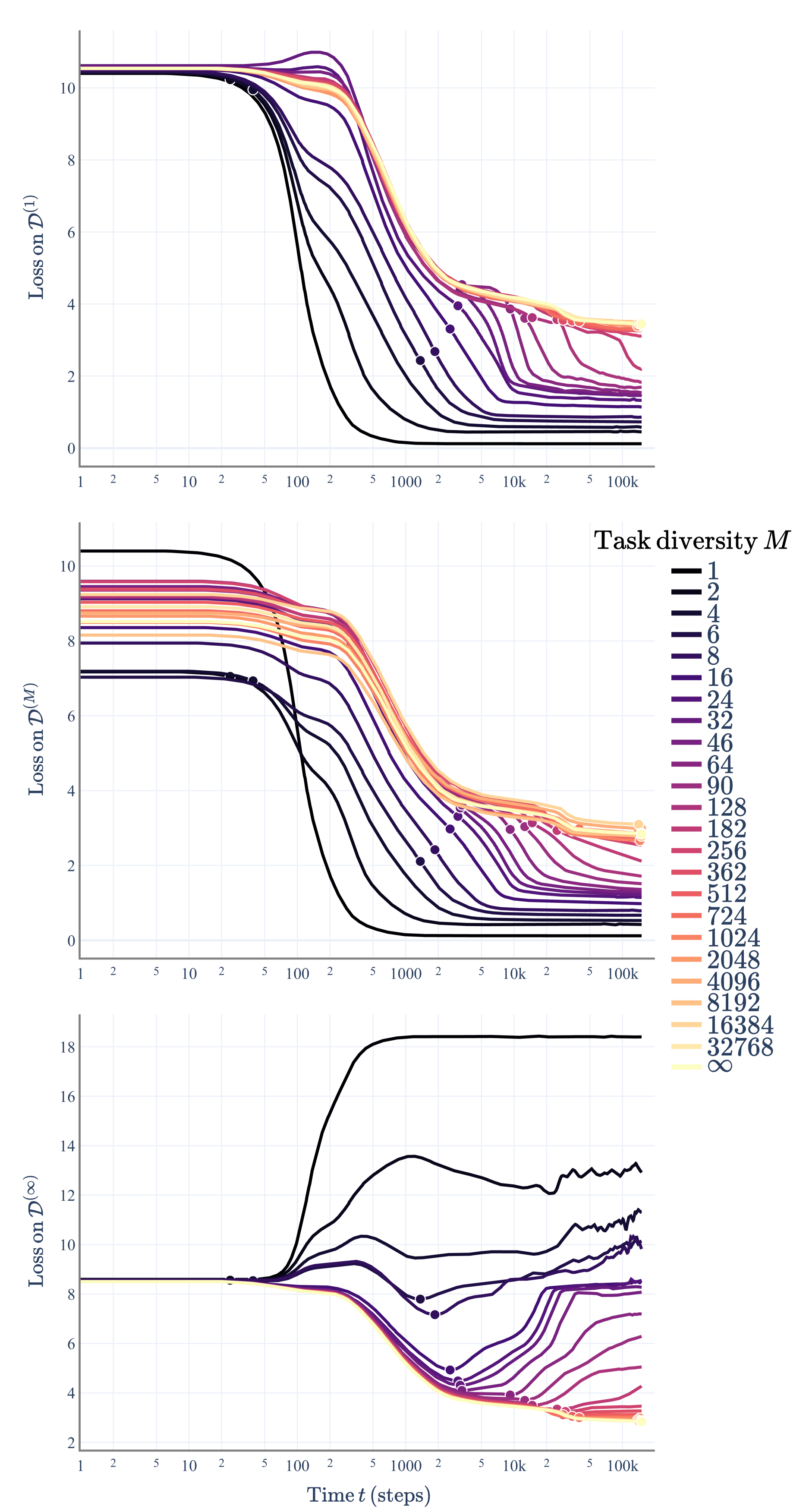

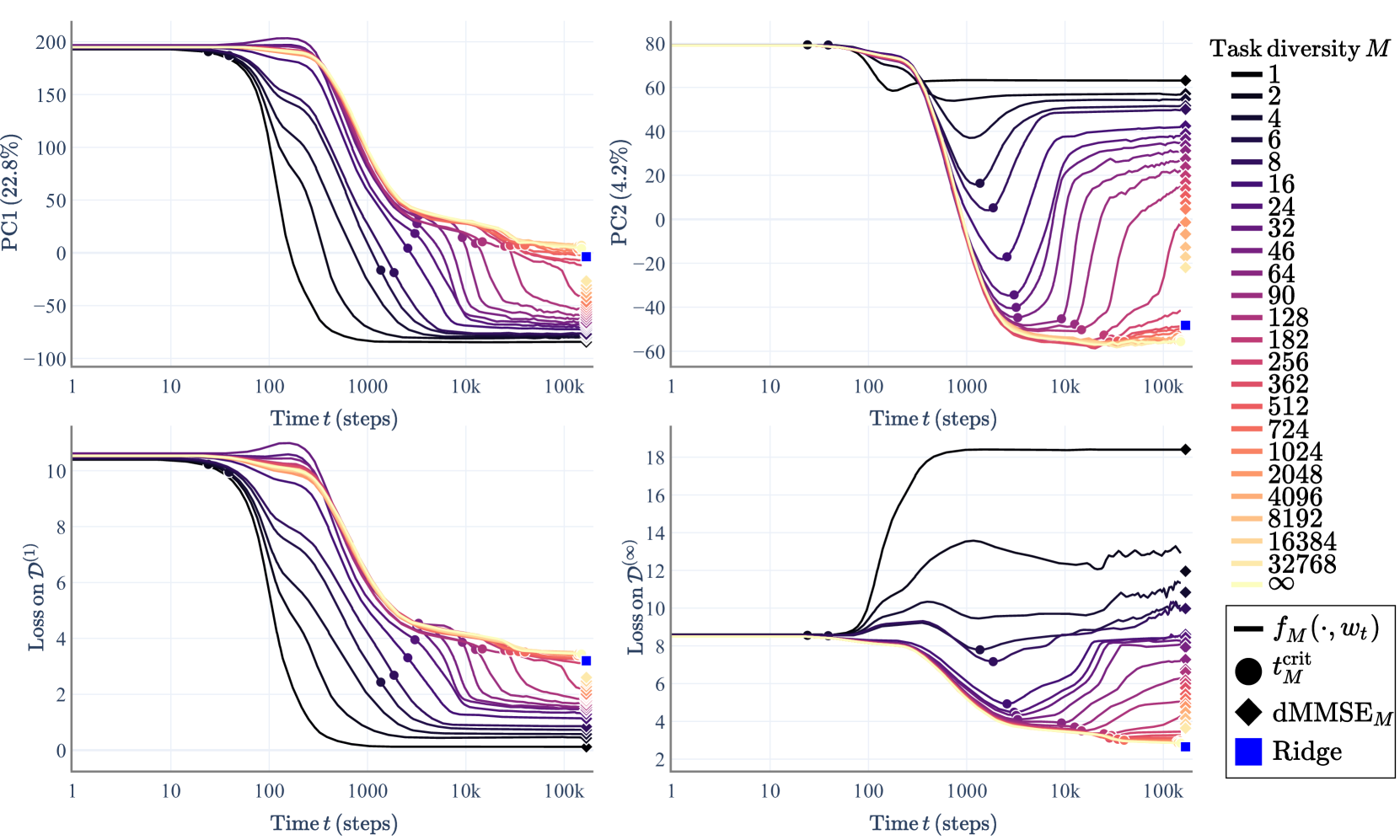

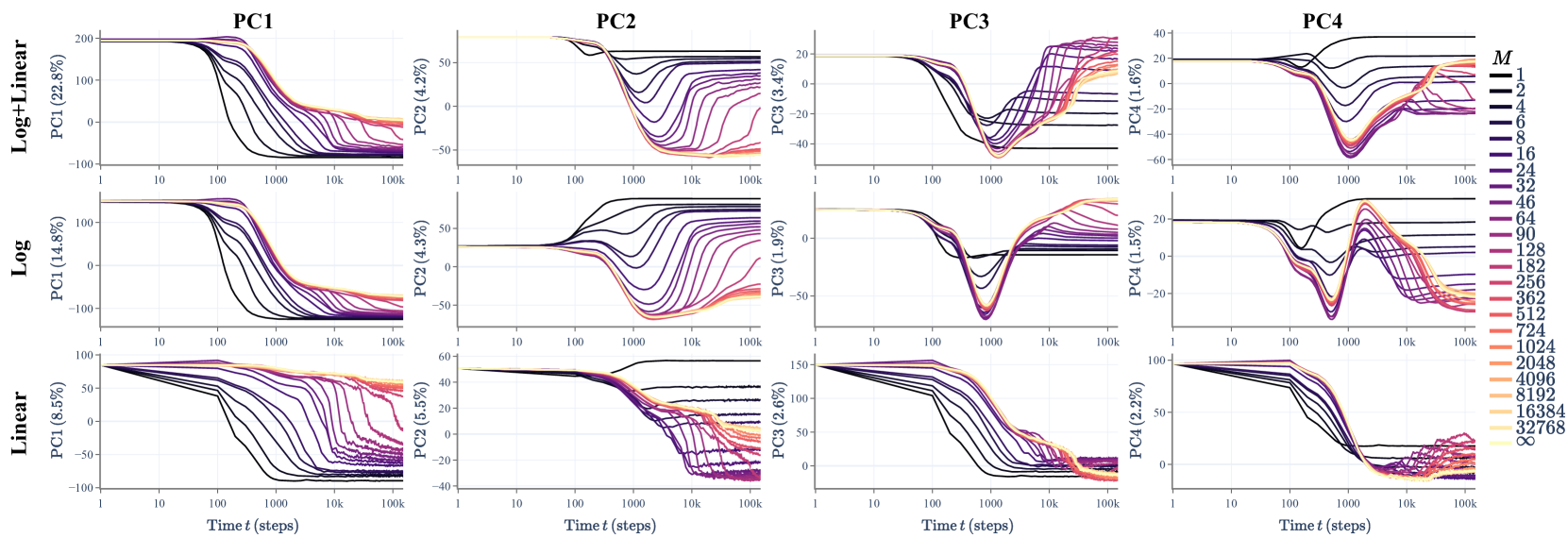

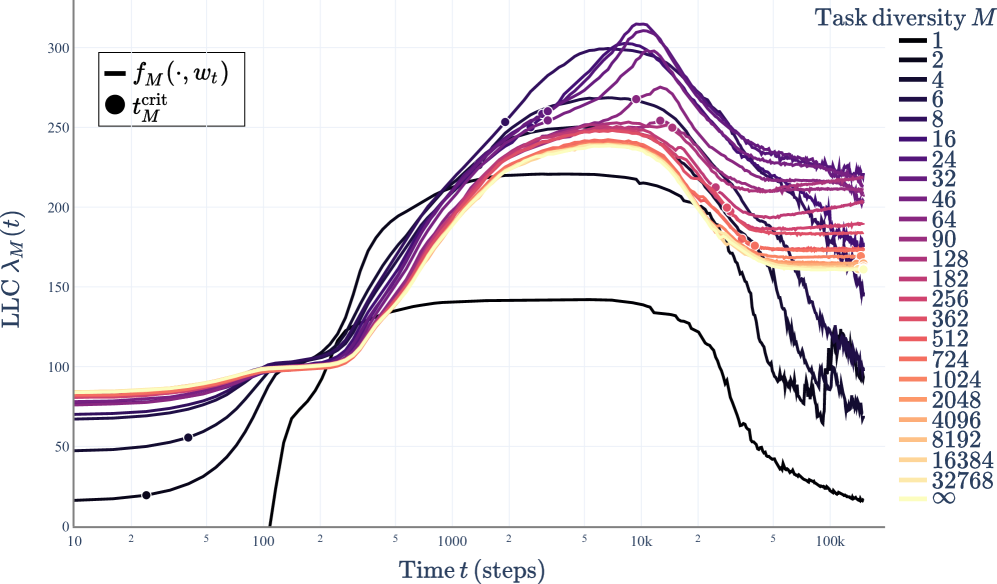

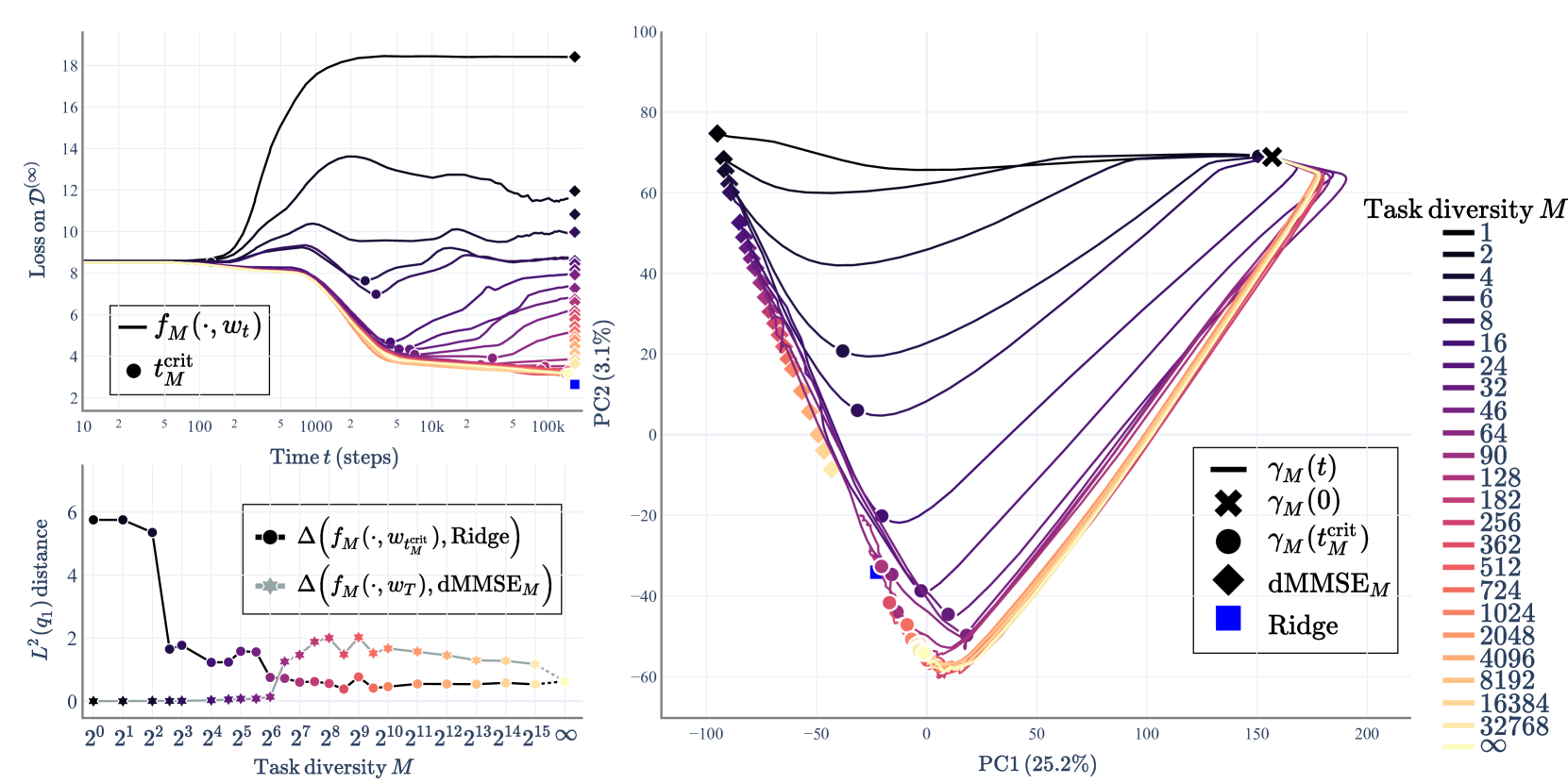

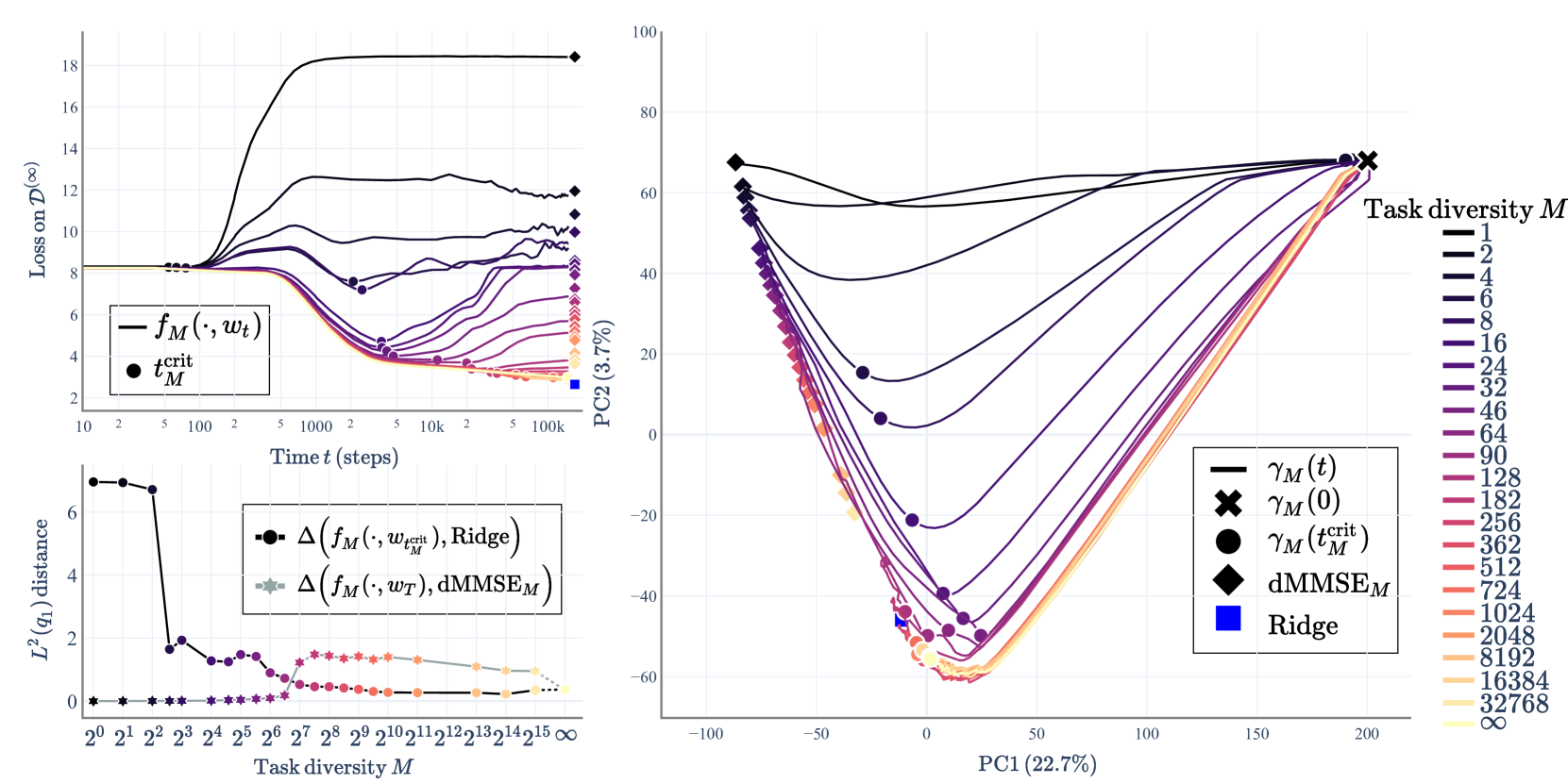

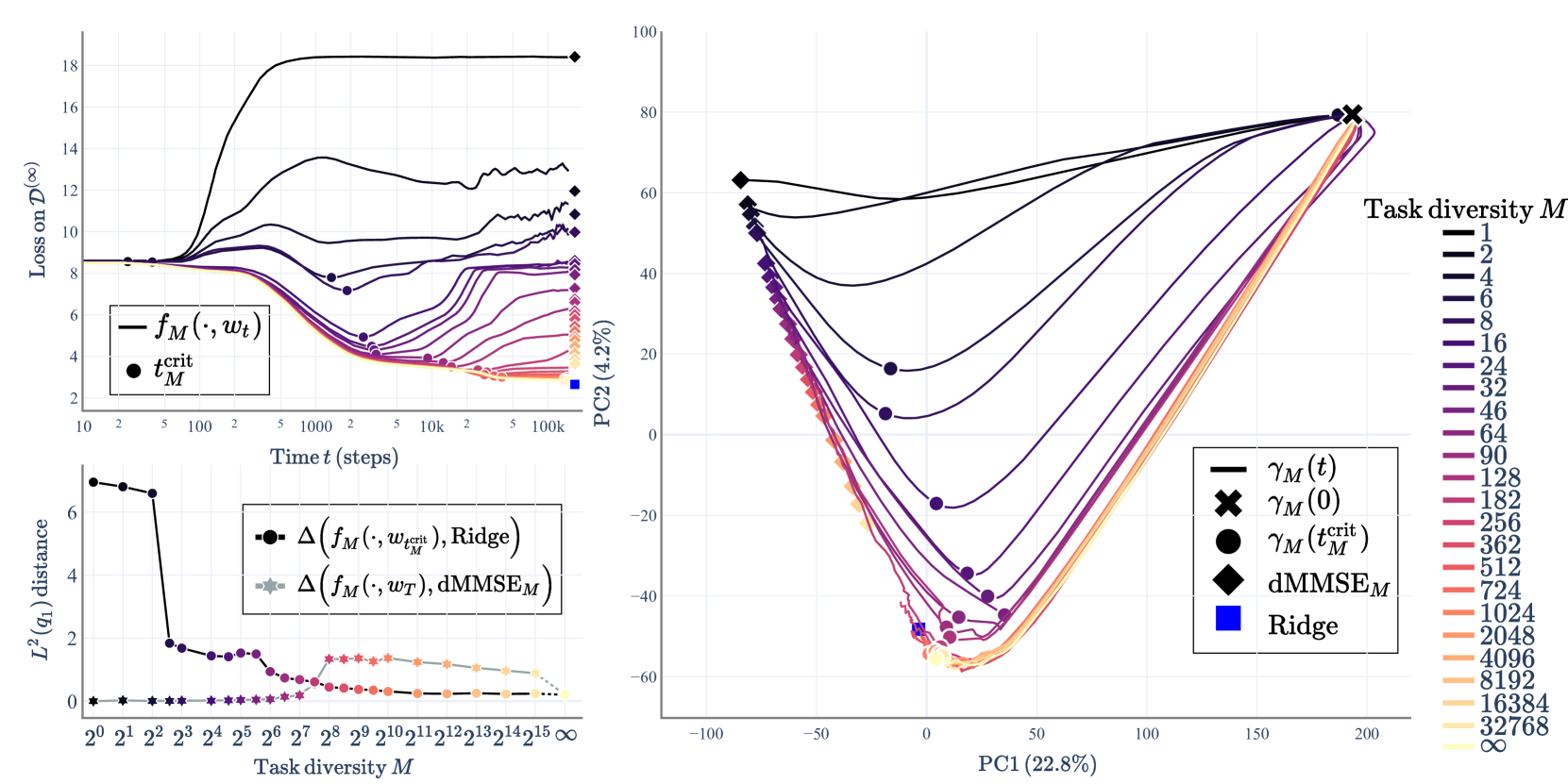

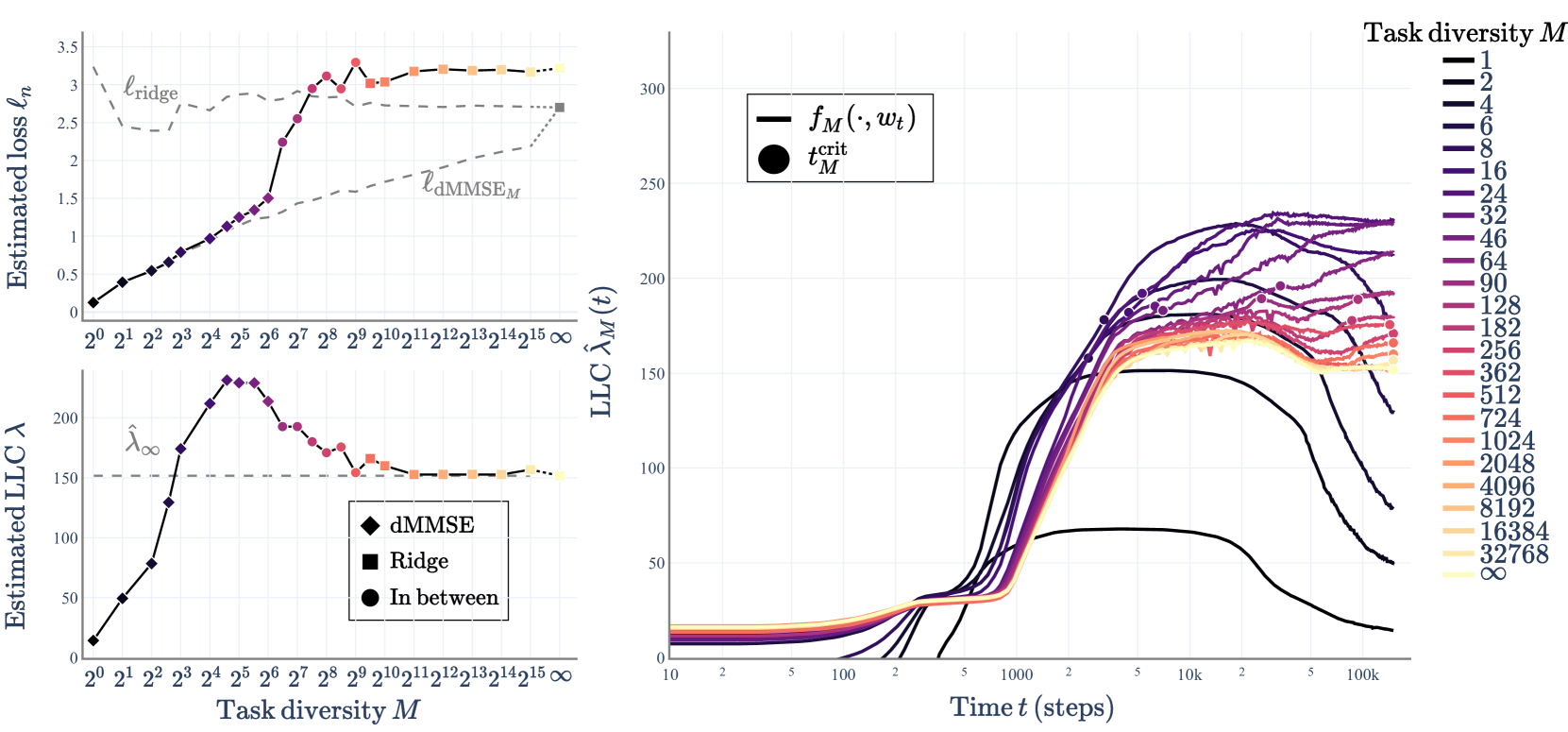

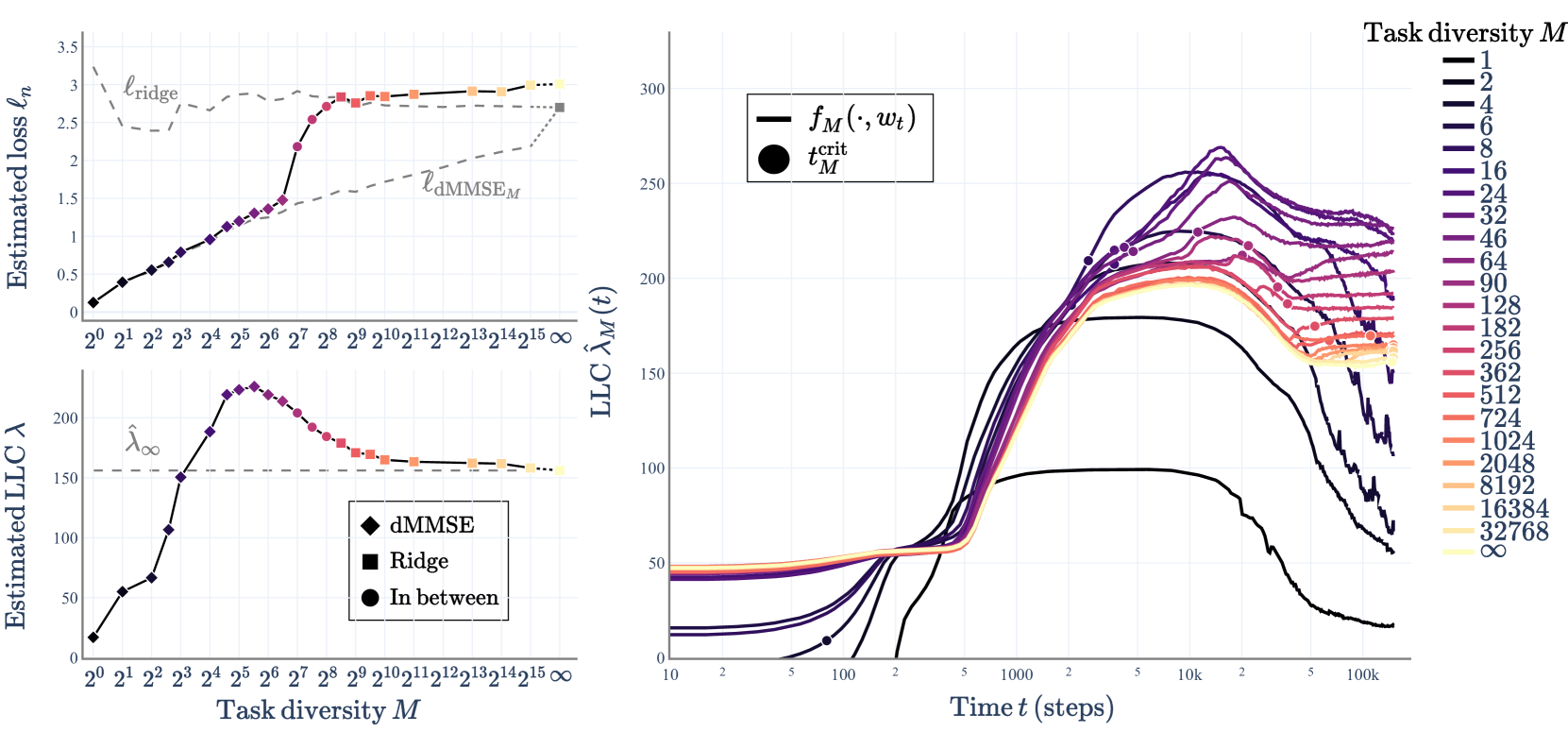

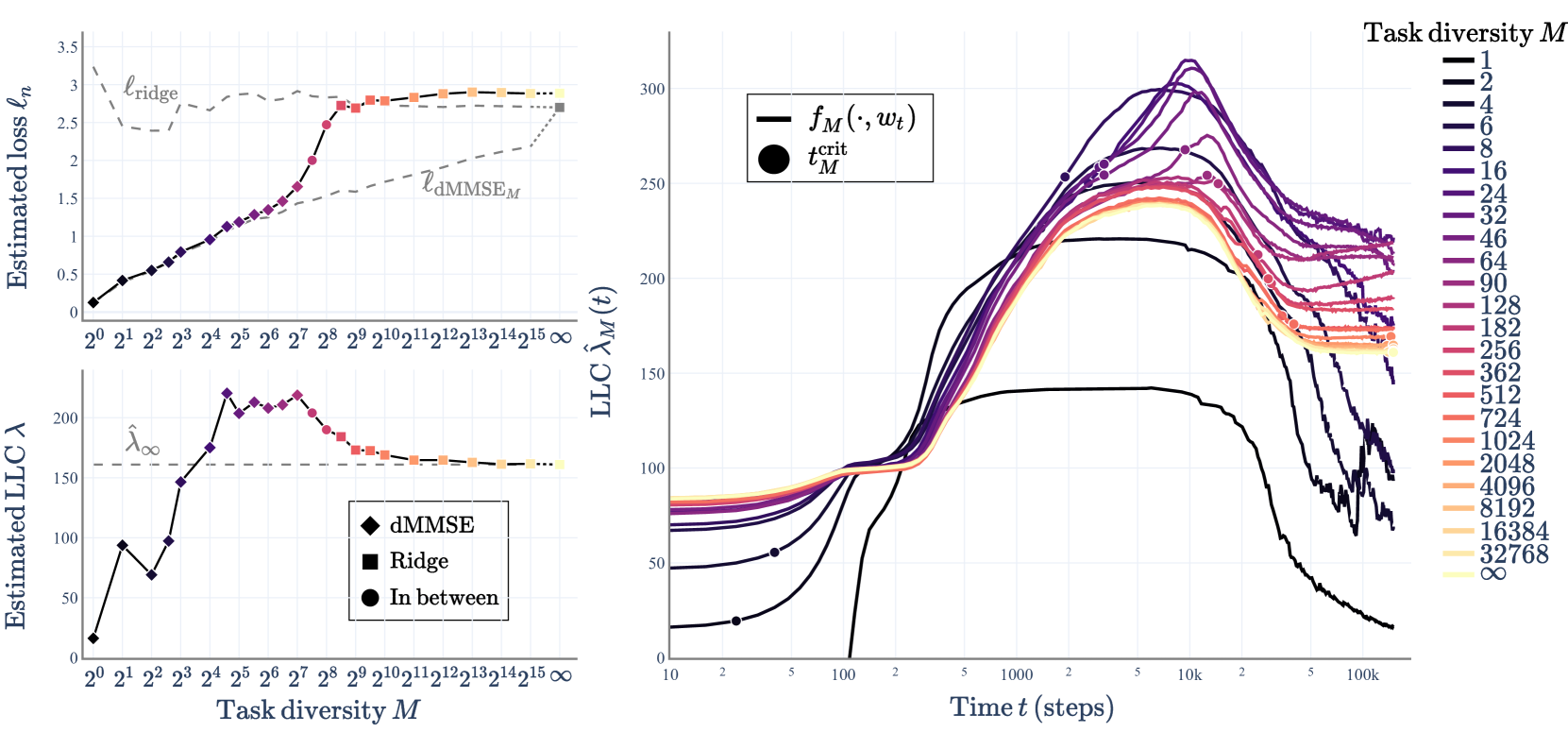

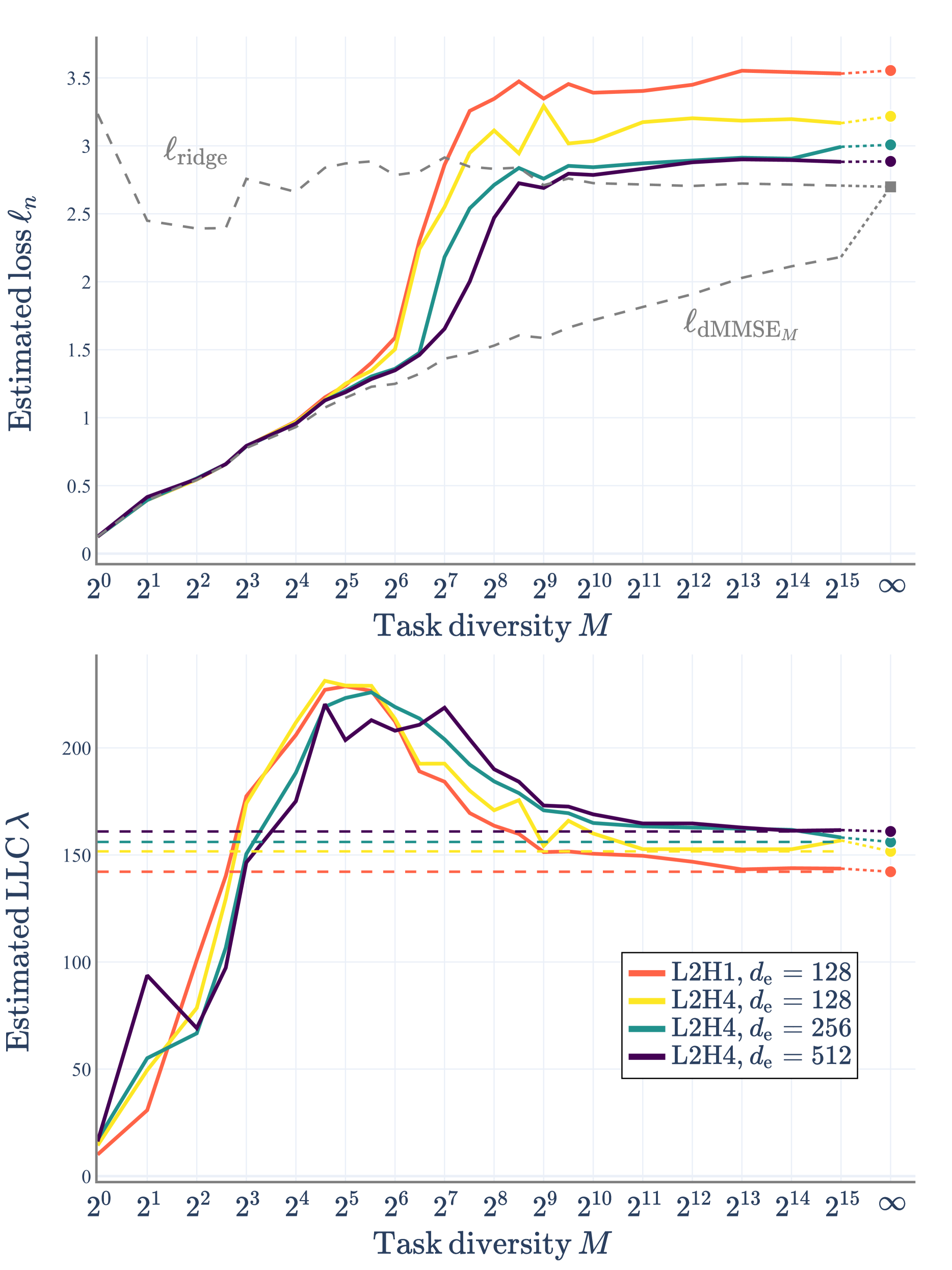

Figure 1 shows OOD loss on a fixed test set and the result of 2-dimensional joint trajectory PCA ( explained variance). Appendix B shows in-distribution loss. Appendix C extends to 4-dimensional PCA, explores the effect of checkpoint distributions, and shows that results are insensitive to the choice of batch size .

Essential subspace.

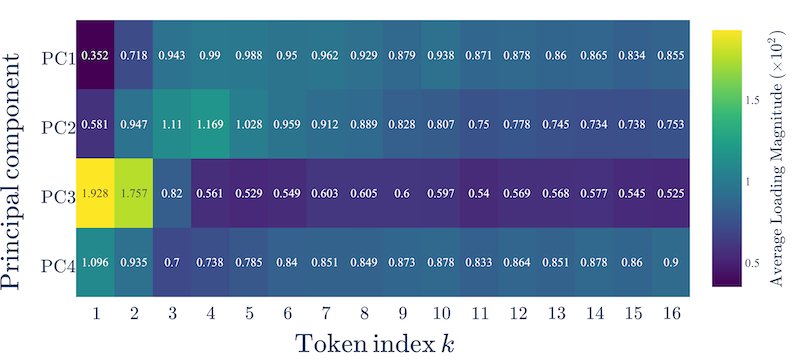

Strikingly, PC2 correlates with an axis of behavioral difference between dMMSEM (for increasing ) and ridge. Appendix E confirms that the predictions on earlier tokens, where dMMSE and ridge differ more, load more heavily on PC2 than those for later tokens do. PC2 also correlates with OOD loss, while PC1 appears to correlate with loss on and with a notion of “development time” (see Figure C.1 and Section C.2).

Task diversity threshold.

As in Raventós et al. (2023), at low task diversity (in our case ), fully-trained transformers behaviorally approximate dMMSEM, while above a task diversity threshold (), they converge to a point that behaviorally approximates ridge. Trajectories converge somewhere between.

Transient ridge.

Replicating Panwar et al. (2024, §6.1), we see that for intermediate in the lead-up to the task diversity threshold, the OOD loss is non-monotonic. For , loss decreases towards that of ridge, then increases to that of dMMSEM. We see a partial dip for and a partial rise for .

Trajectory PCA reveals that this non-monotonicity coincides with changes in the development of in-distribution behavior. For low , the transformers proceed directly to dMMSEM in the essential subspace. As increases (until the task diversity threshold), the trajectories are increasingly deflected from this straight path into one that transits via approximating ridge. Beyond the task diversity threshold, the trajectories proceed directly to ridge and do not depart.

This trajectory PCA result suggests that the presence of the approximate ridge solution in the optimization landscape is in some sense influencing the development of internal structures in the transformers. Moreover, as increases, as the dMMSEM solution changes, the strength of the influence of the ridge solution increases. In the next section, we attempt to understand the nature of this influence.

5 Evolving loss/complexity tradeoff

In this section, we model the transient ridge phenomenon as the result of the transformer navigating an evolving tradeoff between loss and complexity as it undergoes additional training. We draw on the theory of Bayesian internal model selection to qualitatively predict the nature of the tradeoff. We then empirically validate this model of the phenomenon by quantifying the complexity of the fully-trained transformers using the associated complexity measure.

5.1 Learning solutions of increasing complexity

The learning dynamics of many systems follow a pattern of progressing from solutions of high loss but low complexity to solutions of low loss but high complexity. This pattern has been studied in detail in certain models including deep linear networks (e.g., Baldi & Hornik, 1989; Saxe et al., 2019; Gissin et al., 2020; Jacot et al., 2021), multi-index models (Abbe et al., 2023), and image models (Kalimeris et al., 2019), each with their own notion of “complexity.”

Unfortunately, we lack results describing how such a progression should play out, or what complexity measure to use, for general deep learning. Therefore, we turn to singular learning theory (SLT; Watanabe, 2009; 2018)—a framework for studying statistical models with degenerate information geometry, including neural networks (Hagiwara et al., 1993; Watanabe, 2007; Wei et al., 2023)—in which a similar loss/complexity tradeoff has been studied in general terms in the setting of Bayesian inference.

5.2 Bayesian internal model selection

In Bayesian inference, SLT shows that the solutions around which the posterior concentrates are determined by a balance of loss and complexity. Moreover, the ideal balance changes as the number of samples increases, driving a progression from simple but inaccurate solutions to accurate but complex solutions (Watanabe, 2009, §7.6; Chen et al., 2023). The leading-order complexity measure is the local learning coefficient (LLC; Lau et al., 2025), which can be understood as a degeneracy-aware effective parameter count. We outline this internal model selection principle below.

Bayesian posterior.

Consider a neural network parameter space . Let be a nonzero prior over and an empirical loss (the average negative log likelihood) on samples. Then the Bayesian posterior probability of a neighborhood given samples is

where is the marginal likelihood of ,

Bayesian posterior log-odds.

Consider two neighborhoods . The preference of the Bayesian posterior for over can be summarized in the posterior log-odds,

| (6) |

which is positive to the extent that prefers over .

Watanabe’s free energy formula.

SLT gives an asymptotic expansion of the Bayesian local free energy . Let be a solution, that is, a local minimum of the expected negative log likelihood, and let be a closed ball around , in which is a maximally degenerate global minimum. Then, under certain technical conditions on the model, we have the following asymptotic expansion (Watanabe, 2018, Theorem 11; Lau et al., 2025):

| (7) |

where is the LLC and the lower-order terms include various other contributions, such as from the prior.
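For orientation, the standard form of the free energy expansion and its consequence for the posterior log-odds can be written as below. This is a sketch in assumed notation, not a recovery of the paper's typesetting: $n$ is the number of samples, $L_n$ the empirical loss, $\lambda$ the LLC, $F_n(W) = -\log Z_n(W)$ the local free energy, $p_n$ the posterior, and $u, v$ solutions with neighborhoods $U, V$.

```latex
% Free energy expansion around a solution u (standard statement; notation assumed)
F_n(B(u)) = n L_n(u) + \lambda(u) \log n + O_p(\log \log n)

% Log-odds as a free energy difference, then substituting the expansion
\log \frac{p_n(U)}{p_n(V)} = F_n(V) - F_n(U)
  = n \bigl( L_n(v) - L_n(u) \bigr)
  + \bigl( \lambda(v) - \lambda(u) \bigr) \log n
  + O_p(\log \log n)
```

The first term grows linearly in $n$ and rewards lower loss, while the second grows only logarithmically and rewards lower LLC, so which term dominates depends on the sample size.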

The loss/complexity tradeoff.

Equation 8 describes an evolving tradeoff between loss and complexity as follows. Assume the lower-order terms from each free energy expansion cancel. Then if ( has higher loss than ) and ( has lower LLC than ), the sign of the log-odds depends on . The Bayesian posterior will prefer (around the simple but inaccurate solution) until , after which it will prefer (around the accurate but complex solution).
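The switch in preference can be made concrete with a small numerical sketch. It assumes only the leading-order terms (loss gap times the number of samples, against LLC gap times its logarithm) and drops the lower-order terms. The function names and the example gap values are hypothetical, not measurements from the paper.

```python
import math


def log_odds(n, loss_gap, llc_gap):
    """Leading-order log-odds of the simple solution over the complex one.

    loss_gap: loss of the simple solution minus loss of the complex one.
    llc_gap:  LLC of the complex solution minus LLC of the simple one.
    """
    return -loss_gap * n + llc_gap * math.log(n)


def crossover_sample_size(loss_gap, llc_gap):
    """Sample size beyond which the complex solution is preferred.

    Returns None when this leading-order model has no range of n in
    which the simple solution is preferred and then overtaken.
    """
    if loss_gap <= 0 or llc_gap <= 0 or llc_gap / loss_gap <= math.e:
        return None
    lo = llc_gap / loss_gap          # maximizer of the log-odds, positive there
    hi = 2.0 * lo
    while log_odds(hi, loss_gap, llc_gap) > 0:
        hi *= 2.0                    # expand until the sign has flipped
    for _ in range(200):             # bisection on the sign change
        mid = 0.5 * (lo + hi)
        if log_odds(mid, loss_gap, llc_gap) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

In this toy model, shrinking `loss_gap` or growing `llc_gap` pushes the crossover to larger sample sizes, which mirrors the qualitative claim that a smaller loss advantage and a larger complexity cost delay the departure from the simple solution.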

From Bayesian inference to deep learning.

Neural networks are typically trained by stochastic gradient-based optimization, not Bayesian inference. Nevertheless, as described in Section 2, recent work suggests that some qualitatively similar evolving tradeoff governs the development of structure in deep learning over training time (Chen et al., 2023; Hoogland et al., 2024; Wang et al., 2024).

This suggests that some as-yet-unknown principle of “dynamic internal model selection” (in which the loss and the LLC play leading roles) underpins the structure of the optimization landscape, in turn influencing the trajectories followed by stochastic gradient-based optimization. Here “dynamic” is meant in the sense of nonlinear dynamics (cf., e.g., Strogatz, 1994), where it is well-established that degeneracy in the geometry of critical points of a governing potential influences system trajectories. Based on this motivation, we apply equation 8 to qualitatively predict the transient ridge phenomenon in terms of the differences in loss and LLC of the transformers that approximately implement the dMMSEM and ridge predictors.

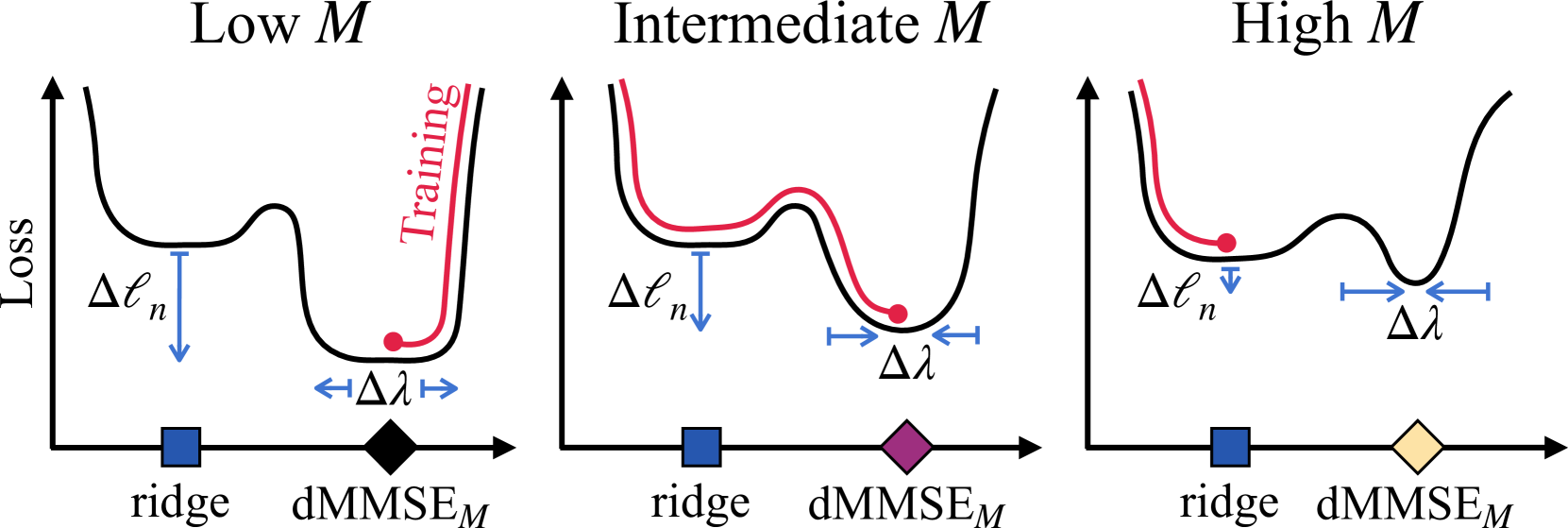

5.3 Explaining the transient ridge phenomenon

We offer the following explanation for the dynamics of transformers trained on in-context linear regression data with task diversity . Let and be transformer parameters approximately implementing ridge and dMMSEM respectively, along with their neighborhoods.

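- Low task diversity: We expect the dMMSEM solution to have much lower loss and LLC than the ridge solution. As equation 8 never favors the ridge solution, training should proceed directly to the dMMSEM solution.

- Intermediate task diversity: We expect the dMMSEM solution to have lower loss but higher LLC than the ridge solution. As equation 8 initially favors the ridge solution but eventually favors the dMMSEM solution, training should proceed first towards ridge before pivoting towards dMMSEM (after a number of training steps that increases with the task diversity).

- High task diversity: We expect the dMMSEM solution to have slightly lower loss but much higher LLC than the ridge solution. As equation 8 only favors the dMMSEM solution at very large sample sizes, trajectories should proceed to ridge and should not depart by the end of training.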

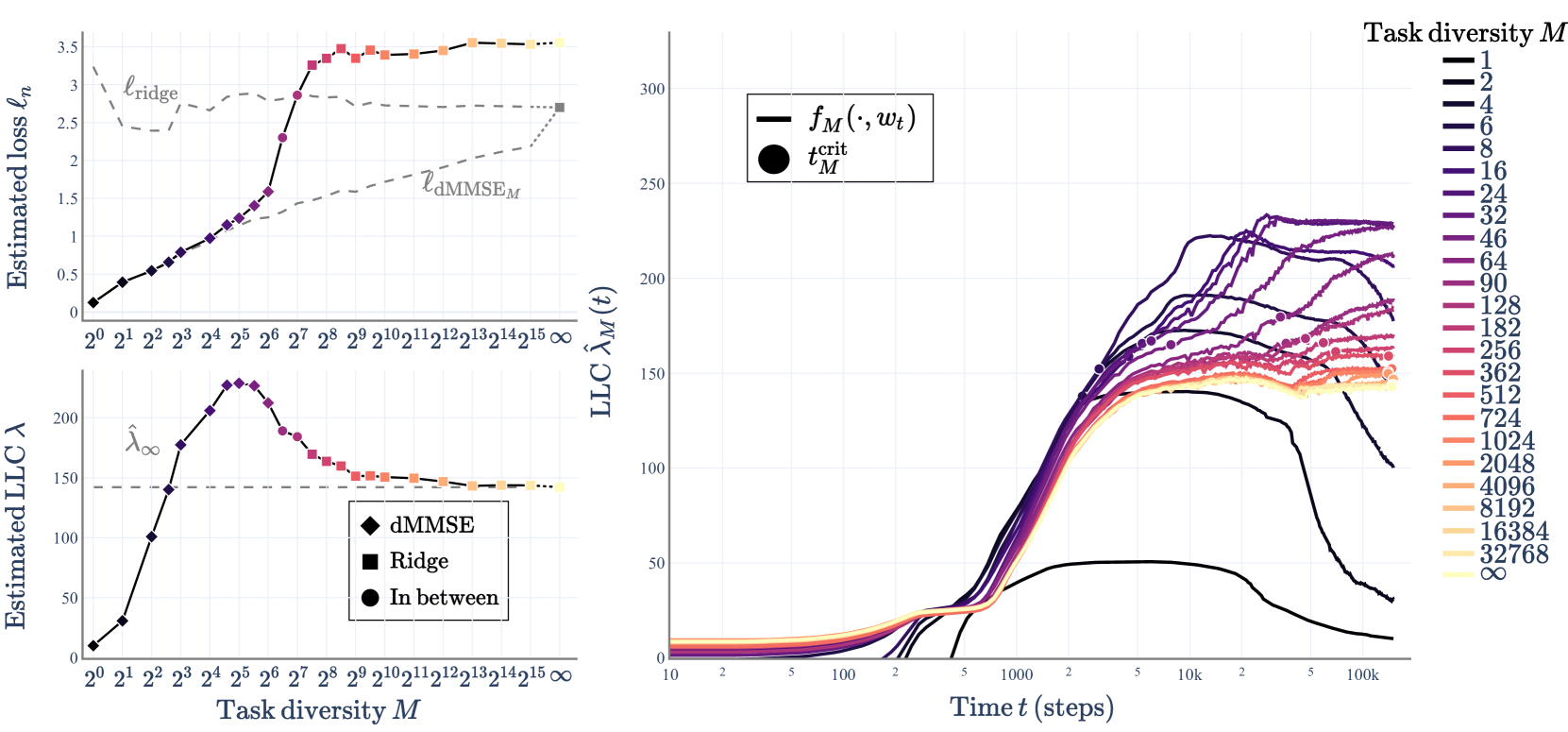

See Figure 2 for a conceptual illustration. For values that fall between these three prototypical cases, the posterior preference is less sharp. Therefore we expect to see gradual shifts in the dynamics over the range of values.

5.4 Empirical validation of the explanation

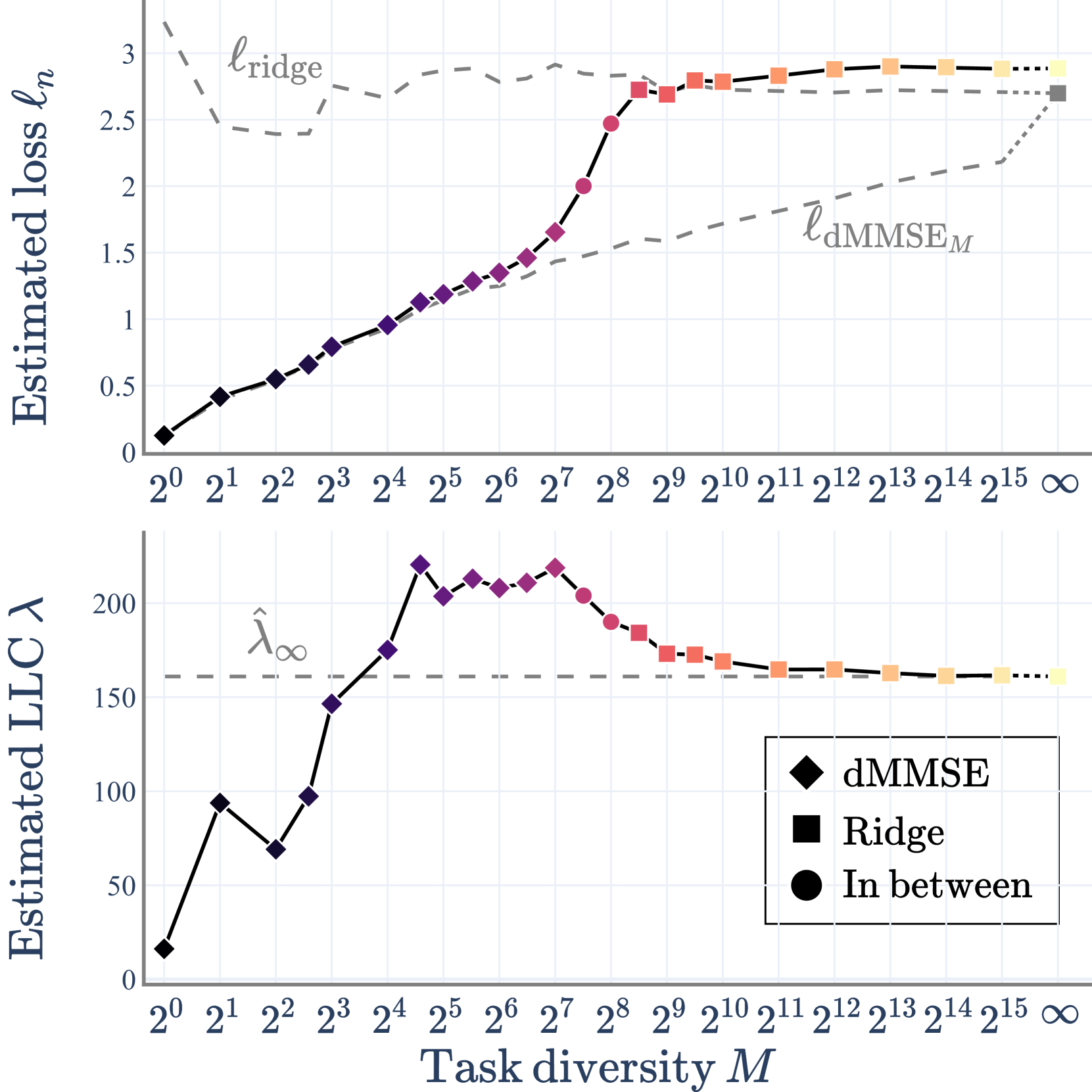

The above explanation is consistent with the findings of Section 4. It remains to validate that the trends in the loss and LLC are as expected. In this section, we outline our experiments estimating the loss and LLC of and .

Estimating loss.

We first estimate the loss of and by directly evaluating the idealized predictors 4 and 5. Alternatively, noting that the transformer cannot necessarily realize these predictors, we evaluate the end-of-training parameters (representing for low or for high ). Figure 3(top) confirms that the loss gap between idealized predictors shrinks with increasing , and the transformers achieve similar loss to their respective algorithms.

Estimating LLC.

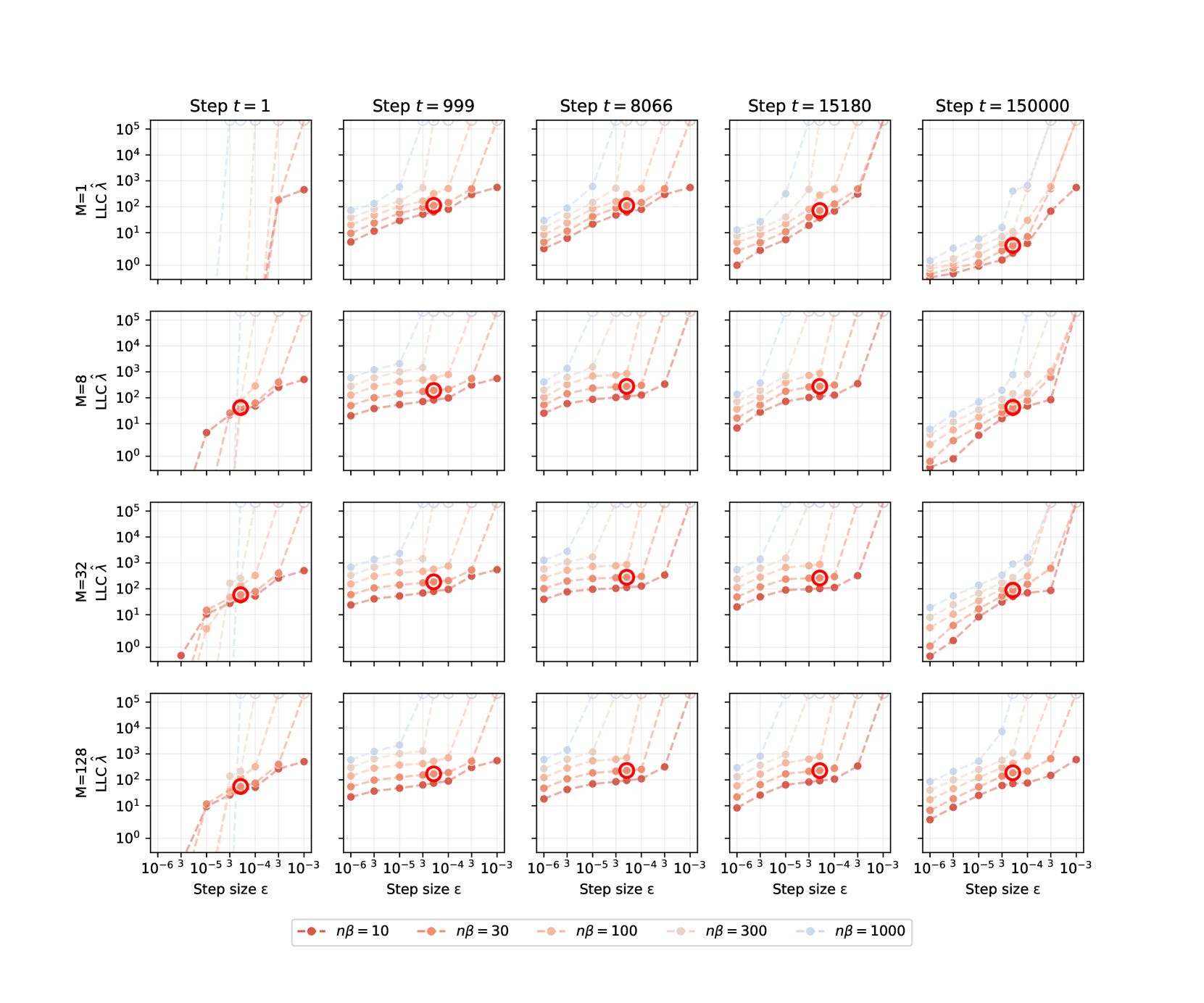

The LLC is architecture-dependent, so we can’t meaningfully measure the LLC of the idealized predictors, only our fully-trained transformers (representing for low or for high ). Following Lau et al. (2025), we estimate the LLC of a parameter as the average increase in empirical loss for nearby parameters,

| (9) |

where is a sample size, is an inverse temperature, is a localization strength parameter, and is expectation over the localized Gibbs posterior

We sample from this posterior with stochastic gradient Langevin dynamics (SGLD; Welling & Teh, 2011). Appendix F gives further details on LLC estimation, sampling with SGLD, and hyperparameter calibration.
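The estimator can be illustrated on a toy problem. The sketch below is not the calibration procedure of Appendix F: it assumes the estimator takes the form "sample size times inverse temperature times the average loss increase under the localized posterior", it uses full-gradient Langevin updates in place of minibatch SGLD, and the quadratic loss, the function name `estimate_llc`, and every hyperparameter value are hypothetical choices made so the result can be checked by hand.

```python
import numpy as np


def estimate_llc(loss_fn, grad_fn, w_star, n, beta, gamma,
                 step_size, num_steps, burn_in, rng):
    """Estimate the LLC at w_star by sampling a localized Gibbs posterior."""
    w = w_star.copy()
    base_loss = loss_fn(w_star)
    losses = []
    for step in range(num_steps):
        # Gradient of n*beta*L(w) + (gamma/2)*||w - w_star||^2.
        drift = n * beta * grad_fn(w) + gamma * (w - w_star)
        noise = np.sqrt(step_size) * rng.standard_normal(w.shape)
        w = w - 0.5 * step_size * drift + noise
        if step >= burn_in:
            losses.append(loss_fn(w))
    return n * beta * (np.mean(losses) - base_loss)


# Toy check: a regular quadratic loss in d dimensions has LLC d/2.
dim = 4
n = 1000
llc_hat = estimate_llc(
    loss_fn=lambda w: 0.5 * float(w @ w),
    grad_fn=lambda w: w,
    w_star=np.zeros(dim),
    n=n,
    beta=1.0 / np.log(n),
    gamma=1.0,
    step_size=1e-3,
    num_steps=20000,
    burn_in=1000,
    rng=np.random.default_rng(0),
)
```

For this non-degenerate quadratic the estimate should land near half the parameter count, up to discretization and localization bias. For a real transformer, degenerate directions in the loss landscape are what allow the LLC to fall well below that value.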

Figure 3(bottom) shows LLC estimates for fully-trained transformers. High- LLCs converge to , which we take as , the LLC of the ridge solution. For low- transformers that converge to dMMSEM, we take as . As expected, this LLC increases with , and crosses during the onset of transience. Surprisingly, the estimated LLC of dMMSEM plateaus above , suggesting that the approximation achieved by the fully-trained transformers may be incomplete.

6 Limitations and future work

Our findings support an understanding of the transient ridge phenomenon as driven by an evolving loss/complexity tradeoff, governed by principles that are yet to be fully discovered but qualitatively resemble Bayesian internal model selection. In this section, we enumerate remaining gaps in this understanding, representing future steps towards a comprehensive understanding of neural network development.

6.1 Transformers versus idealized predictors

Our analysis is based primarily on in-distribution behavior, and it is not clear that our transformers can or do faithfully approximate the idealized predictors for all input sequences. Moreover, it is unclear whether the solutions governing training dynamics are necessarily the parameters to which transformers converge (we consider an alternative interpretation in Appendix G). Future work could seek a more detailed understanding of transformer solutions arising in practice, for example using mechanistic interpretability.

6.2 The role of lower-order terms

In Section 5.2, we make the simplifying assumption that the lower-order terms from each expansion cancel. However if these terms are not equal then their difference enters the posterior log odds, influencing the evolution of the posterior, especially for low . SLT has studied these terms (cf., e.g., Lau et al., 2025), but they are not as well-studied as the LLC. Future work could deepen our theoretical understanding of these lower-order terms or our empirical understanding of their role in internal model selection.

6.3 Dynamic versus Bayesian internal model selection

Of course, our primary motivation is to study neural network development, rather than Bayesian internal model selection per se. While we have contributed further evidence that the loss and the LLC play a leading role in a principle of “dynamic internal model selection” that governs neural network development, the precise form of this principle and the precise roles of the loss and the LLC remain to be determined. Our work highlights this as a promising direction for future empirical and theoretical work.

6.4 Why does transience stop?

Bayesian internal model selection suggests that the ridge solution should always eventually give way to a more complex but more accurate dMMSEM solution. In practice, replicating Raventós et al. (2023), we see a clear task diversity threshold above which transformers never leave ridge. This could be due to capacity constraints, under-training, neuroplasticity loss, or a concrete difference between Bayesian and “dynamic” internal model selection. Appendix H offers a preliminary analysis, but reaches no firm conclusion, leaving this an open question for future work.

6.5 Beyond in-context linear regression

As outlined in Section 2, the phenomenon of a transient generalizing solution giving way to a memorizing solution over training has now been observed in a range of sequence modeling settings beyond our setting of in-context linear regression (Singh et al., 2024; Hoogland et al., 2024; Edelman et al., 2024; He et al., 2024; Park et al., 2024). There’s also the reverse phenomenon of a transition from a memorizing solution to an equal-loss but simpler generalizing solution (“grokking;” Power et al., 2022; Nanda et al., 2023).

These settings represent rich subjects for future empirical work investigating the principles governing the development of neural networks. In the case of grokking, we note that the particular loss/complexity tradeoff outlined in Section 5 does not account for transitions that decrease complexity, though such transitions can be described within the Bayesian internal model selection framework by taking lower-order terms into account (cf. Section 6.2).

7 Conclusion

This paper contributes an in-depth study of the training dynamics of transformers in the setting of in-context linear regression with variable task diversity. We adapt the technique of trajectory principal component analysis from molecular biology and neuroscience and deploy it to expand our empirical understanding of the developmental dynamics of our transformers, and the variation in these dynamics with task diversity, revealing the choice between memorization and generalization as a principal axis of development.

Moreover, we adopt the perspective of singular learning theory to offer an explanation of these dynamics as an evolving tradeoff between loss and complexity (as measured by the local learning coefficient), finding evidence that these elements play a leading role in governing the development of our transformers, akin to their role in governing the development of the posterior in Bayesian internal model selection. These findings open the door to future research aiming to uncover the true principles governing the development of internal structure in deep learning.

Appendix


Appendix A Transformer training details

A.1 Architecture

We use the same in-context linear regression transformer architecture as Hoogland et al. (2024): a two-layer transformer modeled after NanoGPT (Karpathy, 2022; see also Phuong & Hutter, 2022) and the architecture of Raventós et al. (2023) (but with fewer layers). In more detail, the architecture is a pre-layer-norm decoder-only transformer with a learnable positional embedding. For the primary architecture used in the main body and related appendices, we use 2 layers, 4 attention heads per layer, 512 embedding dimensions, and 512 MLP dimensions, yielding a transformer with approximately 2.65 million trainable parameters. Further details are given in Table 1.

A.2 Tokenization

Running a sequence through the transformer to produce a predicted label for each subsequence requires an initial encoding or “tokenization” step and a final “projection” step.

- The sequence is first encoded as a sequence of tokens using a tokenization function. Note that this tokenization includes the final label even though it is never used as part of the context for a prediction (it is used only as a label).

- The transformer architecture takes a token sequence as input and produces an output of the same shape. To extract the prediction for each subsequence, we read out the first component of every other token using a projection function.

We use these extracted predictions in computing the loss as per Section 3. Note that the transformer’s outputs in dimensions that are not extracted by the projection function are not subject to training (nor are they subject to evaluation).
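The two steps above can be sketched as follows. This is a minimal illustration, not the authors' code: the token layout (inputs padded with a leading zero, labels placed in the first component), the choice of reading predictions at the input positions, and the names `tokenize` and `project` are all assumptions made for this example.

```python
import numpy as np

def tokenize(xs, ys):
    """Interleave K inputs (shape (K, D)) and K labels (shape (K,)) as 2K tokens.

    Assumed layout: each token has D + 1 components; an input token is (0, x_k)
    and a label token is (y_k, 0, ..., 0).
    """
    num_examples, dim = xs.shape
    tokens = np.zeros((2 * num_examples, dim + 1))
    tokens[0::2, 1:] = xs  # input tokens at even positions
    tokens[1::2, 0] = ys   # label tokens at odd positions
    return tokens

def project(outputs):
    """Read the first component of every other output token.

    Assumption: the prediction for y_k is read at the position of x_k.
    """
    return outputs[0::2, 0]
```

Under this assumed layout, applying `project` directly to the tokenized input returns zeros, since the label slot of every input token is empty until the transformer fills it in.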

Evaluating the loss of the transformer on all masked context predictions and not just the final token is an important design choice for our results. Given a sufficiently large context, many regression algorithms will arrive at the same prediction on late tokens. By training and then tracking the transformer’s early token predictions too, its functional outputs are more representative of its internal structure, giving a richer picture of their essential dynamics with the joint trajectory PCA (see also Appendix E).

A.3 Training

We train each transformer for 150k steps. We employ a learning rate scheduler that increases linearly from 0 to 0.003 over an initial warm-up phase and remains constant thereafter. We train without explicit regularization and use the Adam optimizer (Kingma & Ba, 2014), with batch size 1024. Each training run took 3–4 TPU hours with TPUs provided by Google TPU Research Cloud. Further details are given in Table 1.
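A minimal sketch of an "increase then constant" schedule of this kind is given below. The maximum learning rate of 0.003 is taken from Table 1; the function name and the default `warmup_steps` are placeholders chosen for illustration, not the values used in the paper.

```python
def learning_rate(step, max_lr=0.003, warmup_steps=1000):
    """Linear warm-up from 0 to max_lr, then constant.

    warmup_steps is a hypothetical placeholder value.
    """
    if step < warmup_steps:
        return max_lr * step / warmup_steps
    return max_lr
```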

Each model was initialized at the same point in parameter space, for each , drawn once according to default settings in PyTorch. All models were trained to convergence, except for whose loss was still decreasing very slowly at the end of training. (We used a fixed training duration across all models for practical comparison).

We note that Raventós et al. (2023) used , meaning we train with a larger batch size but for fewer steps. However, the total number of training samples (153M) is comparable to their setting (128M).

A.4 Checkpoint distribution

We sample checkpoint indices , where . In particular, we use a combination of linear and log spaced checkpoints, . Setting k and we define

This set comprises 1,500 checkpoints spaced linearly at 100-step intervals, with the remaining checkpoints logarithmically spaced.

This sampling strategy allows us to observe both early-stage rapid changes and later-stage gradual developments throughout the training process. We investigate the effect of combining log and linear steps on trajectory PCA in Section C.4.
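The combination of linear and logarithmic spacing can be sketched as below. The 100-step linear interval and the resulting 1,500 linear checkpoints follow the text; the number of log-spaced checkpoints (`num_log`), the rounding rule, and the function name are assumptions made for this example.

```python
def checkpoint_steps(total_steps=150_000, linear_interval=100, num_log=50):
    """Return the sorted union of linearly and logarithmically spaced steps.

    num_log is a hypothetical value; the paper's exact count is not shown here.
    """
    linear = set(range(linear_interval, total_steps + 1, linear_interval))
    # Geometric spacing from step 1 up to total_steps, rounded to integers.
    log = {round(total_steps ** (i / (num_log - 1))) for i in range(num_log)}
    return sorted(linear | log), linear, log

steps, linear_steps, log_steps = checkpoint_steps()
```

The log-spaced steps concentrate checkpoints early in training, where the loss changes fastest, while the linear steps give uniform coverage of the later, slower phase.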

| Hyperparameter | Category | Description/Notes | Values |
|---|---|---|---|
| | Data | Total # of training samples | 153M |
| | Data | Batch size during training | 1024 |
| | Data | # of training steps | 150k |
| | Data | Dimension of linear regression task (task size) | 8 |
| | Data | Maximum in-context examples | 16 |
| | Data | Variance of noise in data generation | |
| | Model | # of layers in the model | 2 |
| | Model | # of attention heads per layer | 4 |
| | Model | Size of the hidden layer in MLP | 512 |
| | Model | Embedding size | 512 |
| | Misc | Training run seed | 1 |
| Optimizer Type | Optimizer | Type of optimizer | Adam |
| | Optimizer | Maximum learning rate | 0.003 |
| | Optimizer | Weight Decay | 0 |
| | Optimizer | Betas | (0.9, 0.999) |
| Scheduler Type | Scheduler | Type of learning rate scheduler | Increase then constant |
| Strategy | Scheduler | Strategy for annealing the learning rate | Linear |
| % start | Scheduler | Percentage of the cycle when learning rate is increasing | |

Appendix B Transformer evaluation details

The full results of our evaluation are shown in Figure B.1.

B.1 Evaluation data

We evaluate the performance of our transformers on several distributions:

- We evaluate the root task performance of our transformers on a dataset . Note that is not the training distribution for models with task diversity , but, by construction, the sequences from this distribution are still in the training distribution for those models in the sense that task has positive support under .

- We also evaluate in-distribution performance of the model trained with task diversity on a dataset . Note that this evaluation dataset is different for each model, and it is the same distribution from which the training dataset for that model was sampled.

- As discussed in Section 4.3, we evaluate the out-of-distribution (OOD) performance of our transformers on a dataset . Note that this is technically in-distribution data (rather than OOD data) for the model trained with task diversity .

The specific sequences in , , and are sampled independently from training sequences and therefore have (almost surely) not been seen by the models during training. Each evaluation dataset contains samples.

B.2 Critical times

For a given model , we define as the step at which the loss on is minimized. We give the values of for the primary architecture in Figure 1(b).

| Task diversity | Critical time |

|---|---|

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k | |

| k |

Appendix C Trajectory PCA details

As described in Section 4, we perform PCA on finite-dimensional in-distribution approximations of the functions implemented by each transformer at various checkpoints over training. Note that joint trajectory PCA applies to any family of trajectories, not only those obtained by varying the training distribution; for example, it could be applied to different random seeds of the same training run. The same technique could also be used to study out-of-distribution behavior by varying the input distribution on which the functional outputs are measured.
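The joint construction can be sketched as follows, assuming each trajectory has already been reduced to a matrix with one row per checkpoint and one column per feature (a model prediction on a fixed input). The function name and the use of an SVD on the stacked, mean-centered matrix are choices made for this sketch and are not claimed to match the authors' implementation.

```python
import numpy as np

def joint_trajectory_pca(trajectories, num_components=2):
    """Fit one PCA to all trajectories jointly, then project each trajectory.

    trajectories: list of arrays, each of shape (num_checkpoints, num_features).
    Returns the projected trajectories and the principal directions.
    """
    stacked = np.concatenate(trajectories, axis=0)
    mean = stacked.mean(axis=0)
    # Rows of vt are the principal directions of the centered, stacked data.
    _, _, vt = np.linalg.svd(stacked - mean, full_matrices=False)
    components = vt[:num_components]
    projected = [(traj - mean) @ components.T for traj in trajectories]
    return projected, components
```

Because a single set of components is fitted to all trajectories at once, the projected curves of different runs share the same axes and can be compared directly.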

C.1 Lissajous phenomena of trajectory PCA

Caution is required when interpreting PCA results of timeseries. It is well known that the principal components of Brownian motion (i.e. a diffusion process with stationary increments) are the Fourier modes, proven in the high-dimensional discrete case in Antognini & Sohl-Dickstein (2018), and in the continuous case in Hess (2000); Shinn (2023) building on results in Karhunen–Loève theory (Wang, 2008). This means that when these trajectories are plotted in (PCi, PCj) space for some pair of components i and j, they appear as Lissajous curves. In practice this means that “turning points” in trajectories projected onto their first few principal components (such as the local minima on the PC1 vs. PC2 plots that characterize transient ridge) are not necessarily caused by meaningful signals in the data, and should ideally be corroborated by independent sources of information. In our case, we interpret this trend as meaningful only because it is corroborated by the trends in the OOD loss.
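This effect is easy to reproduce on synthetic data. The sketch below runs PCA on a high-dimensional Gaussian random walk, which contains no meaningful structure, and compares the first principal component over time with a half cosine. The dimensions, seed, and variable names are arbitrary choices for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
num_steps, dim = 200, 2000

# A random walk: cumulative sum of independent Gaussian increments.
walk = np.cumsum(rng.standard_normal((num_steps, dim)), axis=0)
centered = walk - walk.mean(axis=0)

# Projection of the trajectory onto its first principal component.
u, s, _ = np.linalg.svd(centered, full_matrices=False)
pc1_over_time = u[:, 0] * s[0]

# Compare with a half cosine over the duration of the walk (sign is arbitrary).
t = (np.arange(num_steps) + 0.5) / num_steps
half_cosine = np.cos(np.pi * t)
correlation = abs(np.corrcoef(pc1_over_time, half_cosine)[0, 1])
```

A correlation close to 1 here indicates that a smooth, monotonic PC1 curve can arise from pure noise, which is why turning points in PC plots need independent corroboration.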

C.2 Interpretation of PC1 and PC2

The most significant axis of variation in timeseries data typically reflects the development time of the underlying process, capturing both periods of stasis and rapid change. In the canonical Brownian motion case, this manifests as a monotonic half-cosine first principal component, while more general autocorrelative processes can yield deformed versions of these Fourier modes (Shinn, 2023). For neural networks trained via SGD, this development time is naturally dictated by gradient descent steps along the loss landscape.

Indeed, since our PCA results in Figure 1 are built from outputs on , we see in the first column of Figure C.1 that PC1 strongly correlates with each model’s loss on this dataset. Thus, these loss curves effectively capture the underlying development time that PCA detects, providing a clear interpretation of PC1.

PC2, capturing the second most significant axis of variation, correlates with the distinction between dMMSE and ridge, as evidenced in Figure C.1 where the turning point of each curve coincides with its corresponding PC2 curve. These PC2-over-time curves provide finer-grained insight into when a model trends toward dMMSE compared to the OOD loss, with deviations depicted more dramatically. We further investigate this interpretation of PC2 in Appendix E.


C.3 Extending to components

In Figure C.2 and the right of Figure D.1 we investigate the trajectory PCA with principal components. Transient ridge is visible in with a sharp deviation occurring in the trajectory.

C.4 Effect of checkpoint distribution

In Figure C.3 we test the effect on the PCA results if checkpoints are sampled only from versus versus the combined (cf. Section A.4). Interestingly, the different sampling methods do yield fairly different results. The trajectory PCA using are similar to those of , indicating that log-sampling is perhaps sufficient to see the key features of the trajectories in the essential subspace. On the other hand, the curves obtained with are different enough as to suggest that different modes of variation dominate over linear time.

C.5 Number of contexts for PCA features

In the main body, the size of the input contexts in defining the feature space of the trajectory PCA (see Section 4) is set to . In Figure C.4 we show the convergence of the trajectories as increases. The convergence is quite fast, with already somewhat resembling those of (see Figure D.1).

Appendix D Smoothing details for loss and PC curves

We apply smoothing with a Gaussian kernel, with standard deviation 20, to each of the loss curves and PC-over-time curves for all , and . These smoothed curves are used to construct the data in Figure 1 and associated figures in Section 4. Figure D.1 shows the raw curves for comparison. The motivation for applying the smoothing is to aid in visually distinguishing similar curves, as well as to obtain a reliable estimate of each value.
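A minimal sketch of this kind of smoothing is shown below. The standard deviation of 20 is from the text; measuring it in units of checkpoints, truncating the kernel at four standard deviations, and padding the ends by repeating the boundary values are assumptions made for this example.

```python
import numpy as np

def gaussian_smooth(values, sigma=20.0, truncate=4.0):
    """Smooth a 1D curve by convolving it with a normalized Gaussian kernel."""
    values = np.asarray(values, dtype=float)
    radius = int(truncate * sigma + 0.5)
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (offsets / sigma) ** 2)
    kernel /= kernel.sum()
    # Repeat the edge values so the output has the same length as the input.
    padded = np.pad(values, radius, mode="edge")
    return np.convolve(padded, kernel, mode="valid")
```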

Appendix E Per-token analysis

It is natural to ask, which features (columns of ) are most responsible for the transient ridge phenomenon? Given the structure of our feature space, with predictions for each regression example across contexts from , we expect an implicit structure dictated by token positions. As shown in Figure 1(a), most of the variance across is driven by early tokens , where dMMSE and ridge predictions differ most significantly.

To verify this, Figure 1(b) examines the contribution of each token to each PC by calculating the average loading magnitude. For PC and token , the average loading magnitude is

| (10) |

where is the loading of PC against feature , as defined in Section 4.2.
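As an illustration of this quantity, the sketch below averages the absolute loadings of each PC over contexts, leaving one value per token position. It assumes the features are ordered context by context, so that feature index `b * num_tokens + k` holds the prediction for token `k` of context `b`; this ordering and the function name are assumptions, not details confirmed by the text.

```python
import numpy as np

def average_loading_magnitude(loadings, num_contexts, num_tokens):
    """Average |loading| over contexts for each (PC, token position) pair.

    loadings: array of shape (num_pcs, num_contexts * num_tokens),
    with features assumed to be ordered context-major.
    Returns an array of shape (num_pcs, num_tokens).
    """
    num_pcs = loadings.shape[0]
    per_feature = np.abs(loadings).reshape(num_pcs, num_contexts, num_tokens)
    return per_feature.mean(axis=1)
```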

As predicted, we find that tokens contribute most to and therefore are the features that drive the transient ridge phenomenon. Interestingly, contribute strongly to , while later tokens are the main contributors to , almost inverting the pattern of , aiding in the interpretation of the sharp retreat present in (PC2, PC4) space.

This analysis suggests that the model improves its loss primarily by adjusting its computation on these early tokens, where the distinction between specialization (dMMSEM) and generalization (ridge) is most pronounced.

Appendix F LLC estimation details

We offer additional details on our use of SGLD for approximating the expectation in equation 9, which we refer to as LLC estimation. Note that this appendix also pertains to estimation of the LLC at parameters throughout training, as analyzed in Appendix G, not only at fully-trained parameters, as analyzed in the main text.

F.1 Interpreting LLC estimates

For a full formal definition of the LLC, a derivation of the estimator, and experiments validating the soundness of the estimation technique in simpler models, we refer the reader to Lau et al. (2025).

For our purposes, it suffices to note that the form of the estimator can be intuitively understood as a degeneracy-aware effective parameter count—the more degenerate directions in the loss landscape near , the more ways for the SGLD sampler to find points of low loss, the lower the estimate.

F.2 Estimating the LLC with SGLD

We adopt the approach of Lau et al. (2025) in using stochastic gradient Langevin dynamics (SGLD; Welling & Teh, 2011) to estimate the expectation value of the loss in the LLC estimator. For a given weight configuration , we generate independent chains, each consisting of steps. Each chain produces a sequence of weights . We then estimate the expectation of an observable using:

| (11) |

For the estimations studied here, we include a burn-in period. Within each chain (omitting the chain index for clarity), we generate samples as follows:

| (12) | ||||

| (13) |

where the step is computed using the SGLD update:

| (14) |

For each step , we sample a mini-batch of size and use the associated empirical loss to compute the gradient. We follow Lau et al. (2025) in recycling the mini-batch losses computed during SGLD for the expectation average.
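The procedure can be illustrated on a toy problem. The sketch below follows the general form of the SGLD-based estimator of Lau et al. (2025), in which the estimate is the effective sample size times the gap between the expected sampled loss and the loss at the reference parameter. It is not the authors' code: it uses a quadratic toy loss with exact gradients instead of mini-batches, and the function name, hyperparameter values, and chain lengths are chosen for the toy problem only.

```python
import numpy as np

def estimate_llc(loss, grad, w_star, n_beta=30.0, step_size=1e-3,
                 localization=0.01, num_chains=8, num_burnin=500,
                 num_draws=2000, seed=0):
    """Estimate the LLC at w_star with localized SGLD (toy, full-batch version)."""
    rng = np.random.default_rng(seed)
    w = np.tile(w_star, (num_chains, 1))  # all chains start at w_star
    sampled_losses = []
    for step in range(num_burnin + num_draws):
        # Drift: tempered loss gradient plus a pull back towards w_star.
        drift = -n_beta * grad(w) - localization * (w - w_star)
        noise = np.sqrt(step_size) * rng.standard_normal(w.shape)
        w = w + 0.5 * step_size * drift + noise
        if step >= num_burnin:
            sampled_losses.append(loss(w))
    expected_loss = np.mean(sampled_losses)
    return n_beta * (expected_loss - loss(w_star[None, :])[0])

# Toy example: a non-degenerate quadratic loss in 10 dimensions,
# for which the learning coefficient is dim / 2.
dim = 10
quadratic_loss = lambda w: 0.5 * np.sum(w ** 2, axis=-1)
quadratic_grad = lambda w: w
llc_estimate = estimate_llc(quadratic_loss, quadratic_grad, np.zeros(dim))
```

For this regular toy loss the estimate should land near half the parameter count; degenerate directions in the loss landscape would lower it.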

F.3 SGLD hyperparameter tuning

For local learning coefficient estimation, we sample 8 independent chains with 4000 steps per chain, of which the first 2500 are discarded as a burn-in, after which we draw observations once per step, with effective sample size (inverse temperature) 30, step size 0.00005, and localization strength 0.01, over batches of size 1024 (see the table below). Local learning coefficient estimation takes on the order of a single TPU-hour per training run.

| Hyperparameter | Category | Description/Notes | Values |
|---|---|---|---|
| C | Sampler | # of chains | 8 |
| | Sampler | Total # of SGLD draws / chain | 1500 |
| | Sampler | # of burn-in steps | 2500 |
| | SGLD | Step size | 0.00005 |
| | SGLD | Localization strength | 0.01 |
| | SGLD | Effective sample size (/inverse temperature) | 30 |
| | SGLD | The size of each SGLD batch | 1024 |

To tune and , we perform a grid sweep over , and over a small subset of training runs and checkpoints, and plot the LLC estimate as a function of , as depicted in Figure F.1. Within these plots, we look for a range of hyperparameters for which is independent of (which shows up as a characteristic plateau). This shows up as an “elbow” in Figure F.1 (Xu, 2024). Subject to these constraints, we maximize to get as close as possible to the “optimal” , and then maximize to reduce the necessary chain length.


F.4 SGLD diagnostics

We employ multiple diagnostics to assess convergence and determine appropriate burn-in periods and numbers of samples for our SGLD chains.

The Geweke diagnostic (Geweke, 1992) compares the means of the initial 10% and the last 50% of the trajectory (after burn-in). Typically, values between indicate a suitable burn-in period. However, in our context, we found this diagnostic overly sensitive to minor trends, often producing large values despite minimal practical differences in LLC estimates.

To address this limitation, we introduce the Relative Percentage Difference (RPD), calculated as:

| (15) |

The RPD provides a more intuitive measure of practical significance, typically showing differences of less than 1% even when Geweke values are large.
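One common way to compute a relative percentage difference is sketched below. The symmetric form (absolute difference divided by the mean magnitude) and the choice to compare the same two segments as the Geweke diagnostic (the first 10% and the last 50% of the post-burn-in trace) are assumptions made for this example; the paper's own definition is the one given in equation 15.

```python
def relative_percentage_difference(a, b):
    """Symmetric relative difference between two values, in percent."""
    return 100.0 * abs(a - b) / ((abs(a) + abs(b)) / 2.0)

def trace_rpd(trace, early_frac=0.1, late_frac=0.5):
    """RPD between the means of an early and a late segment of a trace."""
    n = len(trace)
    early = trace[: max(1, int(early_frac * n))]
    late = trace[n - max(1, int(late_frac * n)):]
    early_mean = sum(early) / len(early)
    late_mean = sum(late) / len(late)
    return relative_percentage_difference(early_mean, late_mean)
```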

We also use the Gelman-Rubin statistic to assess convergence across different chains. Values below 1.1 or 1.2 generally indicate suitable convergence (Gelman & Rubin, 1992; Gelman et al., 2003; Vats & Knudson, 2020).
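For reference, the basic Gelman-Rubin statistic can be computed as in the sketch below, which compares the variance between chain means with the average variance within chains. This is the textbook form (Gelman & Rubin, 1992) without later refinements such as chain splitting, and it is not claimed to be the exact variant used for the results here.

```python
import numpy as np

def gelman_rubin(chains):
    """Basic Gelman-Rubin statistic for an array of shape (num_chains, num_draws)."""
    chains = np.asarray(chains, dtype=float)
    _, num_draws = chains.shape
    chain_means = chains.mean(axis=1)
    between = num_draws * chain_means.var(ddof=1)  # between-chain variance
    within = chains.var(axis=1, ddof=1).mean()     # mean within-chain variance
    pooled = (num_draws - 1) / num_draws * within + between / num_draws
    return np.sqrt(pooled / within)
```

Values near 1 indicate that the chains are sampling from similar distributions; chains stuck in different regions inflate the between-chain term and push the statistic well above the 1.1 to 1.2 range.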

Representative loss traces for our chosen hyperparameters are shown in Figure F.2. Visual inspection suggests healthy, stabilized traces for most runs. The final checkpoints of low- training runs appear to be an exception and are still underconverged (top-right). The RPD confirms these observations, with values below 1% for 16/25 checkpoints and below 2% for 22/25 checkpoints. The exceptions align with visually less converged cases.

Interestingly, the Geweke diagnostic suggests most chains are far from converged, with values well outside the range. We attribute this to the diagnostic’s sensitivity to minute trends when samples cluster tightly. Given the practical insignificance of these trends (as judged visually and by RPD), we opt to disregard these extreme Geweke values.

Our hyperparameter choice represents a compromise. While it is challenging to select parameters that perform optimally across all training runs, we aim for a consistent choice of hyperparameters to allow fair comparison. Based on visual inspection of loss traces, -insensitivity criteria, and these diagnostic checks, we believe our chosen hyperparameters are appropriate even if, for example, individual checkpoints may be underconverged.

Appendix G Which solutions govern development?

In Section 5, we referred to solutions and , representing ridge and dMMSEM respectively, and explained transient ridge in terms of the roles that the loss and LLC of these solutions play in the evolving loss/complexity tradeoff in Bayesian internal model selection. In this appendix, we discuss two alternative candidates for the solutions that govern the development of our transformers.

G.1 Candidate 1: End-of-training parameters

The most straightforward assumption, adopted in the main text, is that the development of our transformers is governed by the solutions to which our transformers converge. In particular, we assume there is a parameter implementing an approximation of ridge to which high- transformers converge, and there is a family of parameters for implementing approximations of dMMSEM to which low- and intermediate- transformers converge (though high- transformers never do).

While we have shown that the in-distribution behavior of some of our transformers approximately matches that of dMMSE or ridge at convergence, it is an additional assumption to suppose that the loss and complexity of these parameters govern development. The main text outlines how, under this assumption, the loss/complexity tradeoff explanation lines up with our observations (though, since the trajectories do not converge to dMMSEM for high , we have no obvious way to estimate in that case).

G.2 Candidate 2: Intermediate parameters

We now develop an alternative model of the development of our transformers. In brief, we first note that the emergence of dMMSEM or ridge (as the case may be) is not necessarily the last significant internal development in our transformers. In particular, there may be some other parameter that governs the trajectory during the development of ridge, after which the development proceeds to be governed by the parameter to which the transformer eventually converges.

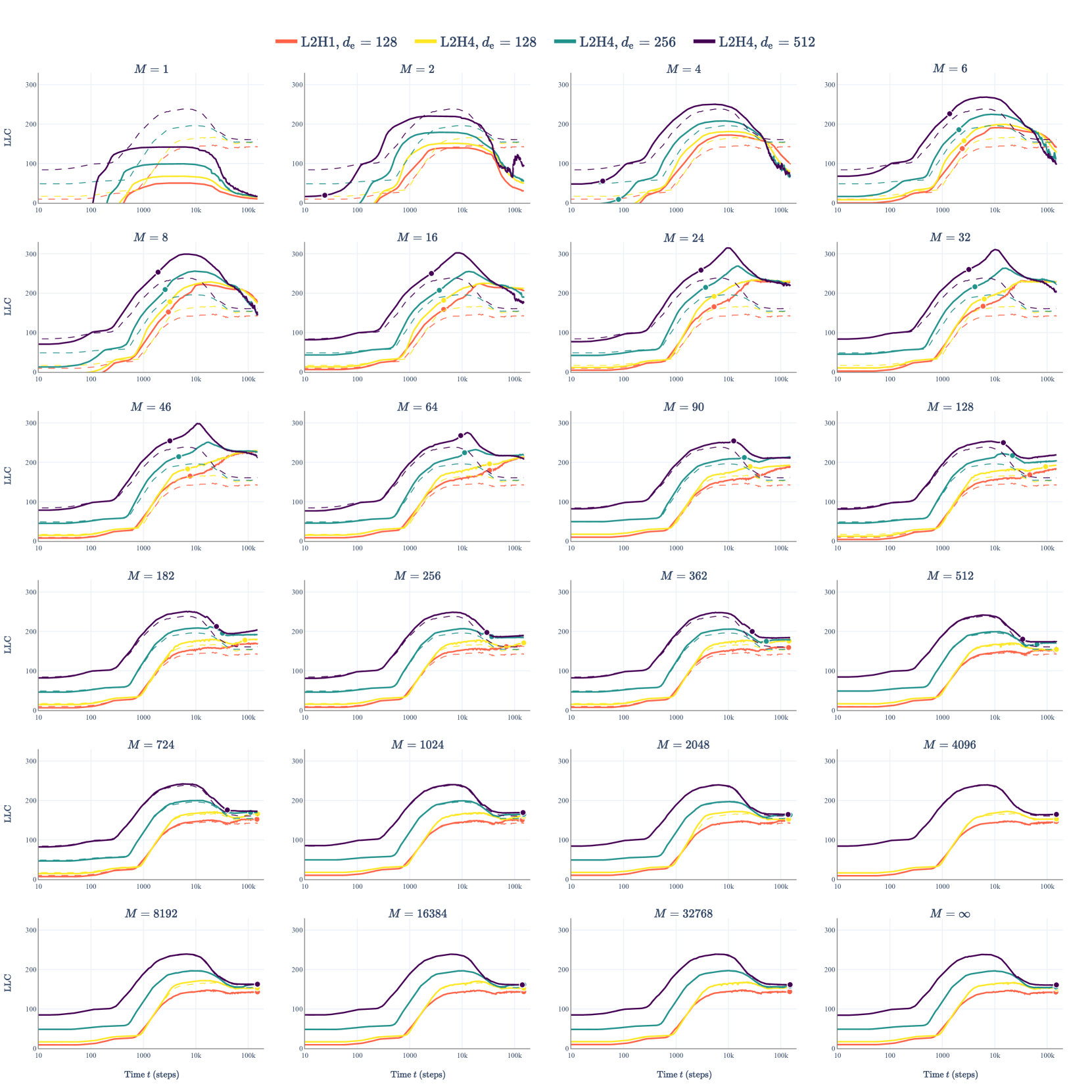

It is difficult to test this story, since we have no obvious way to access such an intermediate governing parameter and measure its LLC. However, we can gain some insight by applying a different methodology of monitoring the LLC of our transformers over the entirety of their development, on the assumption that we will see changes in the LLC playing out during the development. This methodology has been pioneered by Hoogland et al. (2024), though it is not without its own limitations as discussed by Hoogland et al. (2024)—in short, we note that while arbitrary transformer checkpoints may not correspond to local minima, the LLC estimator of Lau et al. (2025) is well-defined for any parameter, and with sufficient hyperparameter calibration (particularly the localization strength) we obtain stable estimates. See Appendix F for more details on LLC estimation calibration.

Thus, we estimate the LLC over training for our family of models of varying . Before analyzing the LLC curves for our models, we recall in some detail the results from Hoogland et al. (2024), who studied similar in-context linear regression models in the case, and found that their development can be divided into several developmental stages, including the following:

- During the first developmental stage, the transformer rapidly learns to predict approximately zero for every context. Since , this is the optimal context-independent prediction.

- During the second stage, the transformer begins to make use of the context for its predictions, coinciding with a significant LLC increase.

- During the third and fourth stages, the transformer’s behavior on in-distribution inputs remains roughly stable, but it specializes to Gaussian-distributed tasks, sacrificing its performance on extremely rare tasks with high magnitudes. These stages coincide with LLC decreases.

Hoogland et al. (2024) also observed that in the third and fourth stage, various internal modules in the transformer “collapse,” focusing their computation on a subset of dimensions. Therefore we refer to these stages as “collapse” stages. We reproduce two indicative figures from Hoogland et al. (2024) for comparison with our own models in Figure G.1. Note that, compared to our architecture, Hoogland et al. (2024) used a 2-layer transformer with a smaller embedding size and so a much smaller number of parameters, and they also used different training parameters including the total number of training steps.

Figure G.2 shows the results of LLC estimation over time for our models. As did Hoogland et al. (2024), we observe that the LLC trends for our transformers can be characterized as a series of LLC changes (gradual increases or decreases) that are punctuated by brief plateaus. Apart from very small , all models have an early plateau, perhaps corresponding to learning an extremely simple context-independent predictor before moving on to more advanced predictors. All models also undergo a parallel decrease in LLC towards the end of training, possibly coinciding with a similar “collapse” of internal weights as studied by Hoogland et al. (2024). After that, for high , the curves follow the development of the case shown in yellow (cf., also, Figure G.1) for the remainder of training.

The interesting trends are in the deviation for intermediate values from this baseline. For some early intermediate values, at around the time of minimum OOD loss, the LLC “spikes.” For these , there is a neat interpretation of this increase as corresponding to a transition between a low-complexity proto-ridge solution and a high-complexity proto-dMMSEM solution, following the same explanation assumed to cover the entirety of training in the main text.

For the later-intermediate values, these “spikes” in LLC appear less pronounced, or are absent altogether (though the LLC still appears to be elevated with respect to the baseline). The final LLC still ends up elevated compared to the final LLC from the case. However, there is no clear sign of a transition from a proto-ridge solution to a proto-dMMSEM solution with an increased LLC to explain this. In these cases, we note that happens close to or within the “collapse” stages that cause a general downturn in the LLC for all . It is possible that any competition between ridge and dMMSEM as it affects the LLC is obscured from our analysis by other developments in the model. Future work could attempt to understand and then prevent this collapse and study the within-training complexity dynamics more clearly.

Appendix H Why does transience stop?

If the development of our transformers were governed exactly by Bayesian internal model selection, with increased training corresponding to increased samples, then the explanation predicts that for all , a higher-complexity but lower-loss solution should eventually be preferred. Instead, we see a task diversity threshold (as originally observed by Raventós et al., 2023) at which transience ends. In this section, we briefly consider several possible explanations for this phenomenon.

H.1 Model capacity constraints

We note that a dMMSEM solution can only compete with the ridge solution if it is a faithful enough approximation of dMMSEM that it actually achieves lower loss than the ridge solution. There is certainly some sufficiently large such that the best realizable approximation of dMMSEM for our architecture is insufficiently competitive, at which point, transience will end. We note that Raventós et al. (2023) and Nguyen & Reddy (2024) draw similar conclusions about the potential role of model capacity in explaining the task diversity threshold.

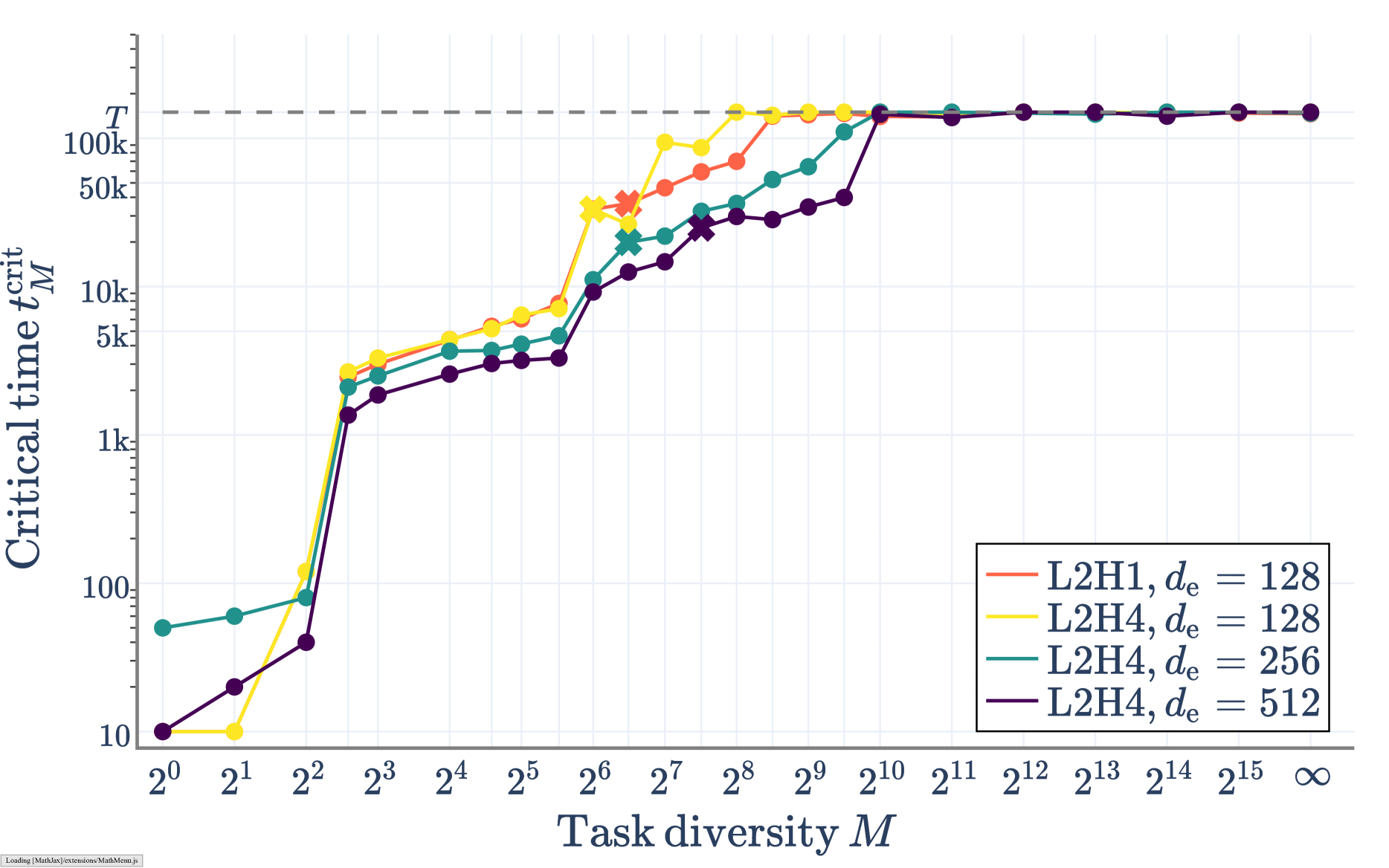

In our case, this would require dMMSEM to be unrealizable by or so. We do not rule this out, though our primary architecture has around 2.65 million parameters. We note that smaller model architectures show slightly lower task diversity thresholds (Appendix I), indicating that model capacity may be somewhat involved.

H.2 Under-training

Bayesian internal model selection predicts that, under the stated assumptions, there will be some amount of data beyond which the lower loss but more complex solution will be preferred, but the lower complexity will be preferred for all lower . By analogy, it is possible that we have not trained our transformers for enough steps to incentivize them to specialize.
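The prediction of a crossover can be made concrete with the standard leading-order free energy expansion from singular learning theory, in which a solution with loss L and learning coefficient λ has free energy approximately nL + λ log n at n samples. The sketch below searches for the first sample size (on a doubling grid) at which a lower-loss, higher-complexity solution attains the lower free energy. The loss and complexity values are hypothetical numbers chosen for illustration and are not measurements from our transformers.

```python
import math

def free_energy(n, loss, llc):
    """Leading-order free energy: n * loss + llc * log(n)."""
    return n * loss + llc * math.log(n)

def crossover_samples(loss_simple, llc_simple, loss_complex, llc_complex,
                      max_n=10**9):
    """First power-of-two n at which the complex solution is preferred, else None."""
    n = 2
    while n <= max_n:
        if free_energy(n, loss_complex, llc_complex) < free_energy(n, loss_simple, llc_simple):
            return n
        n *= 2
    return None

# Hypothetical values: the complex solution has slightly lower loss
# but twice the learning coefficient.
n_cross = crossover_samples(loss_simple=0.20, llc_simple=50.0,
                            loss_complex=0.19, llc_complex=100.0)
```

The smaller the loss gap between the two solutions, the larger the number of samples needed before the more complex solution is preferred, which is the sense in which under-training could prevent specialization.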

We do observe that the critical time (the step of minimum OOD loss) increases with (see Section B.2). However, we note that the critical time is not near the end of training around the task diversity threshold.

H.3 Neuroplasticity loss

It is also possible that not all time-steps contribute equally to the “effective amount of samples” driving the tradeoff. In particular, during the training of our transformers there may be a brief window of opportunity or “critical period” during which they are governed by principles similar to Bayesian internal model selection, and after which they are either governed by similar principles on a slower timescale, or are resistant to further developments.

Some preliminary support for this hypothesis in our case can be seen in the LLC-over-training analysis provided in Appendix G, wherein we compare the development of complexity in our model to the detailed analysis of the case conducted by Hoogland et al. (2024). We observe that the task diversity threshold coincides very roughly with the overlap between the progressively increasing and a general downturn in LLC estimates that affects all models. Hoogland et al. (2024) observed a similar LLC downturn at a similar stage of training and, analyzing the internals of their transformer (with smaller but somewhat similar architecture to ours) they found that many of the weights in various internal modules of the transformer “collapse” during this downturn, which could make further development difficult.

H.4 Non-Bayesian pre-training

We note that Raventós et al. (2023) and Panwar et al. (2024) cast the task diversity threshold as an example of “non-Bayesian in-context learning,” due to the failure of the transformer to adopt the optimal Bayesian solution derived from the pre-training data distribution for in-context learning. In contrast, the perspective of Bayesian internal model selection casts the task diversity threshold as an interesting example of “non-Bayesian pre-training,” in which the transformer fails to learn following the dynamics that would coincide with the evolution of the Bayesian posterior with increasing data.

As we have discussed, it is unclear to what extent neural network development is governed by principles similar to Bayesian internal model selection, which is a formal mathematical model of Bayesian inference in neural networks, not of stochastic gradient-based optimization. It is plausible based on the perspective of nonlinear dynamics that degeneracy (such as measured by the LLC) should play a role in governing system trajectories during stochastic gradient-based optimization, and our work contributes empirical evidence that this is the case. However, there are still various gaps in our understanding of the principles governing the development of neural networks. The true “dynamic internal model selection” principles, once discovered, may reveal a natural explanation for the end of transience, leaving nothing further to be explained.

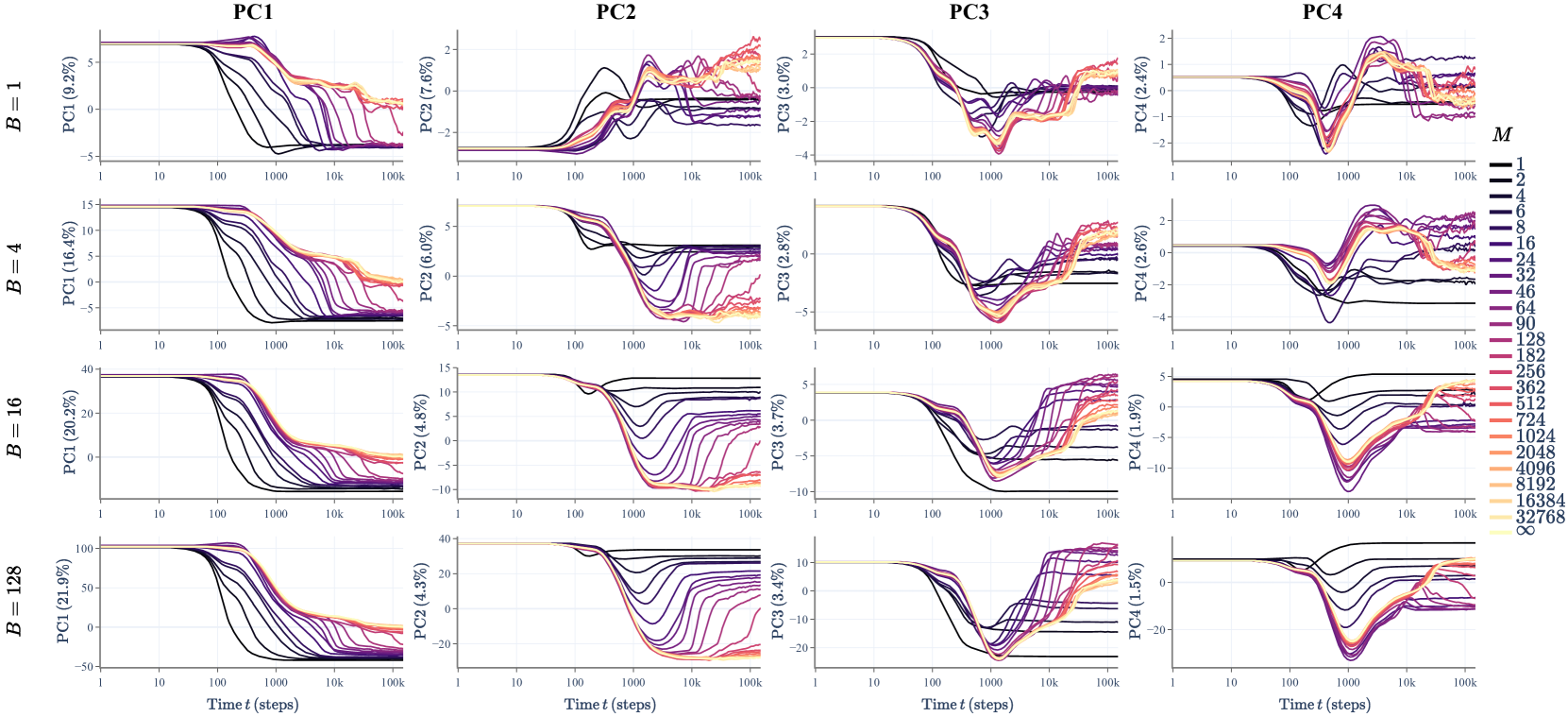

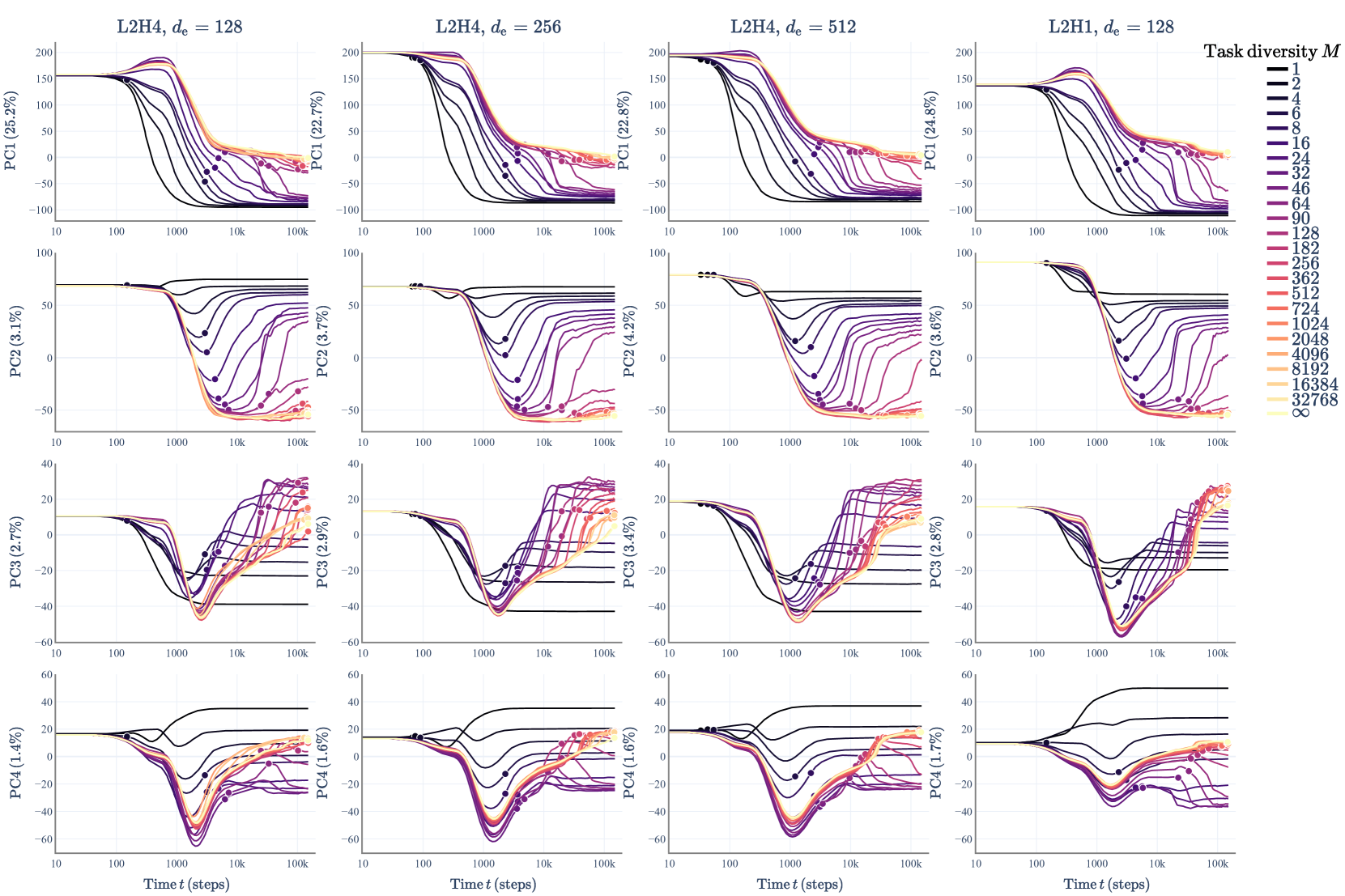

Appendix I Experiments with additional architectures

Section A.1 details the architecture hyperparameters of the primary architecture used in our main analysis. In this appendix, we report OOD loss, joint trajectory PCA, loss and LLC estimates, and LLC-over-time analysis (see Appendix G) for three additional transformer architectures (with smaller numbers of heads and/or smaller embedding/MLP dimensions). The three additional architectures we study are as follows:

- layers, head, dimensional embeddings. The OOD loss and trajectory PCA results are in Figure I.2. The loss, LLC, and LLC-over-time results are in Figure I.6.

- layers, heads, dimensional embeddings. The OOD loss and trajectory PCA results are in Figure I.3. The loss, LLC, and LLC-over-time results are in Figure I.7.

- layers, heads, dimensional embeddings. The OOD loss and trajectory PCA results are in Figure I.4. The loss, LLC, and LLC-over-time results are in Figure I.8.

For comparison, we also reproduce Figures 1, 3 and G.2 for the primary architecture with 2 layers, 4 heads, and 512-dimensional embeddings. The OOD loss and trajectory PCA results are in Figure I.5. The loss, LLC, and LLC-over-time results are in Figure I.9.

We provide a comparison of the PC over time curves for each architecture in Figure I.1.

In Figure I.10(a), we plot values (Section B.2) for all for all four architectures. We see that within each architecture, increases with . In Figure I.10(b) we plot the final loss and final LLC of all architectures for comparison, which shows that the task diversity threshold increases slightly with architecture size. All four architectures exhibit qualitatively similar behaviors as shown by these two figures.

In Figure I.11, we plot LLC over time curves for easy comparison between architectures for each fixed , as well as the respective “baseline” of the model. While we mostly see similar behavior across architectures, there are subtle differences visible in these complexity dynamics, suggesting that specific architectural choices may influence the developmental trajectory of these models in meaningful ways.


Cite as

@article{carroll2025dynamics,
  author = {Liam Carroll and Jesse Hoogland and Matthew Farrugia-Roberts and Daniel Murfet},
  title = {Dynamics of Transient Structure in In-Context Linear Regression Transformers},
  year = {2025},
  url = {https://arxiv.org/abs/2501.17745},
  eprint = {2501.17745},
  archivePrefix = {arXiv},
  abstract = {Modern deep neural networks display striking examples of rich internal computational structure. Uncovering principles governing the development of such structure is a priority for the science of deep learning. In this paper, we explore the transient ridge phenomenon: when transformers are trained on in-context linear regression tasks with intermediate task diversity, they initially behave like ridge regression before specializing to the tasks in their training distribution. This transition from a general solution to a specialized solution is revealed by joint trajectory principal component analysis. Further, we draw on the theory of Bayesian internal model selection to suggest a general explanation for the phenomena of transient structure in transformers, based on an evolving tradeoff between loss and complexity. We empirically validate this explanation by measuring the model complexity of our transformers as defined by the local learning coefficient.}
}